ChatGPT adds parental controls

Will the rest of the industry follow? PLUS: Claude Sonnet 4.5's software-on-demand dream, and your responses to my anniversary post

This is a column about AI. My boyfriend works at Anthropic. See my full ethics disclosure here.

Earlier this month, amid mounting concerns about the effects of chatbot usage on vulnerable people, OpenAI pledged to introduce new safety features for teenage users. On Monday, the company rolled out the first of those features — parental controls that will alert you if your teenager’s conversations with ChatGPT suggest they may be considering self-harm. Today let’s talk about the tricky balance the company is trying to strike between teens’ safety and their privacy — and the pressure this puts on the rest of the chatbot industry to respond with similar measures.

In some ways, OpenAI is now speedrunning the regulatory backlash that social media companies have navigated for years. Facebook, Twitter, and YouTube had spread through society for more than a decade before lawmakers began to scrutinize how recommendation algorithms and other design features could put young people at risk; by contrast, ChatGPT is still less than three years old.

That OpenAI already faces pressure on child safety issues speaks both to the growing awareness of tech risks in general and to the specific risks posed by chatbots trained to agree with and affirm their users in almost every case.

But those risks, which can be found in every chatbot on the market, are resulting in real harms — both in younger users and in adults. Once or twice a week now, after having been contacted by someone who believes that ChatGPT has become sentient or helped them to produce a staggering scientific advance, I send people a highly empathetic and de-escalating LessWrong post by Justis Mills titled “So You Think You've Awoken ChatGPT.” Almost everyone who replies tells me that, while the other people who email me about these matters might be deluded, the post certainly does not apply to them.

Importantly, that LessWrong post holds the user blameless: after all, ChatGPT will tell users that it has been awakened in one way or another, or that the user has invented a new branch of particle physics. Not every harm perpetrated by technology has a technological solution. (You can’t eliminate eating disorders through algorithmic changes alone, for example.) But it seems clear that chatbots’ effects on mental health are, to some significant degree, a product problem: ChatGPT tells you that it’s alive, and you believe it.

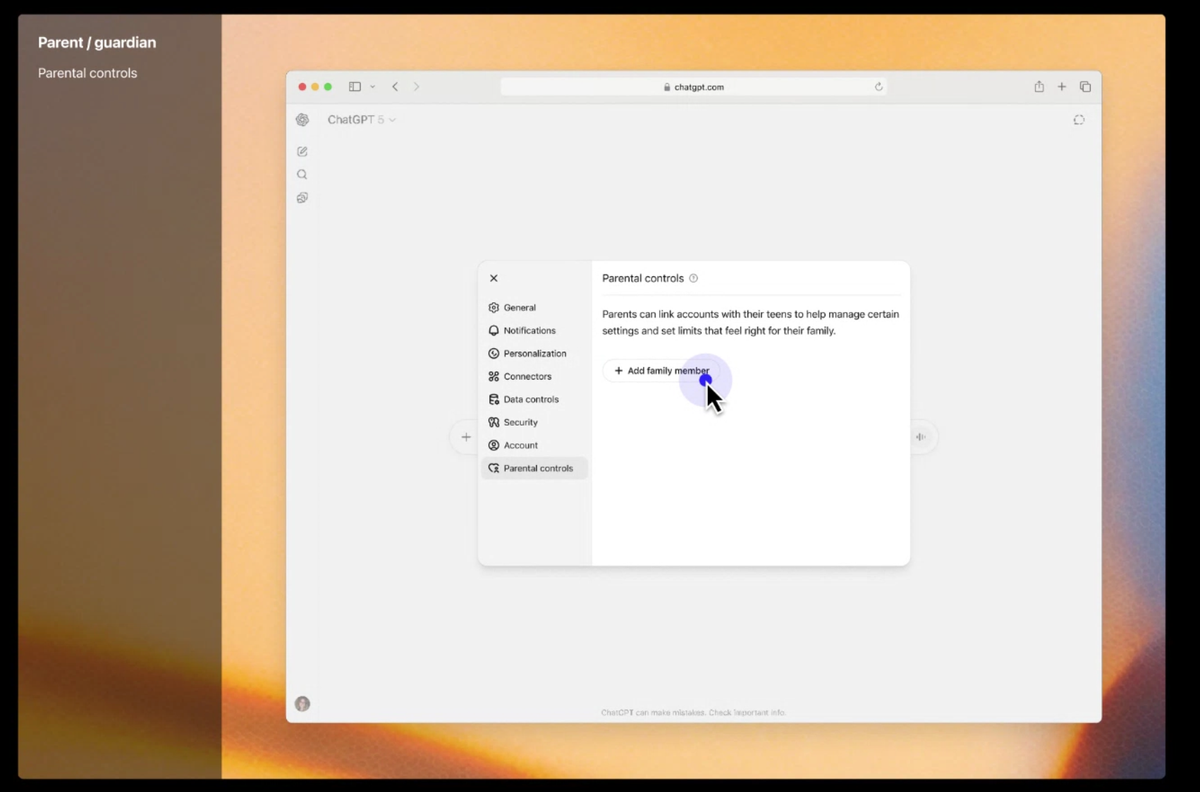

The parental controls introduced by OpenAI today represent a step to protect some of its most vulnerable users: teenagers who are expressing thoughts of self-harm. Parental controls, which are being made available to all ChatGPT users today, let you connect your account to a teenager’s, or vice versa. (Officially, ChatGPT can only be used by children 13 and older.) The idea builds on the teen accounts that Meta rolled out last year on Facebook and Instagram, which also connect children’s accounts to those of their guardians.

Once that connection has been established, ChatGPT will monitor teens’ use of the product. If the teenager expresses thoughts of self-harm, OpenAI will send the conversation to a team of full-time employees for review. If the reviewers determine the child is at risk, OpenAI will notify the parent through multiple channels, including phone, email, and push notifications sent through the app.

OpenAI will not share the specific prompts with parents, in an effort to preserve some privacy for the teenager. (If you’re a queer teenager and having thoughts of self-harm in part because your family is unsupportive, for example, sharing your exact words with your parents may only worsen the problem.) But parents can request more information from OpenAI, such as whether their child might pose a risk to others, whether they have explored a particular method of self-harm, or the time stamps of their conversations.

“The principle is to give parents enough information to take action while preserving some teen privacy,” Lauren Jonas, the head of youth well-being at OpenAI, told me in an interview.

Parents can also opt to disable what OpenAI calls “sensitive content,” which reduces ChatGPT’s ability to respond to questions involving body image, dieting, sexuality, and potentially dangerous activities. (It should prevent ChatGPT from telling your child how to do the “Tide Pod challenge,” for example.)

OpenAI says it will add more features soon, beginning with an effort to automatically determine a user’s age and route them into an experience with sensitive content disabled when they are under 18.

“We’ve worked with countless third-party teen development experts” to develop the new controls, Jonas told me. “This is really grounded in where teens are in their developmental life cycle. We know teens 13 to 17 have reduced impulse control and higher sensitivity to body image issues. … The macro goal for parental controls is to give parents choice about what is right for their families.”

You can appreciate the new choices that OpenAI is giving parents here while also noting that parental controls push at least some of the responsibility for ChatGPT safety onto those same parents, and require them to create ChatGPT accounts of their own. In that, OpenAI joins Meta, Snap, TikTok, and other makers of apps popular with teenagers, which all but require parents to take on a part-time job learning and managing the settings of apps that change continuously and introduce new risks as they do.

At the same time, this is a feature that could absolutely save lives. It’s also a feature that no other chatbot maker has yet introduced, despite high-profile cases of harm on their platforms.

Preventing teenagers from getting the “sensitive content” they want from ChatGPT may simply result in them going to look for it elsewhere, limiting the ability of one company’s controls to prevent harm. But it beats doing nothing — and there’s reason to hope that other AI developers will follow suit.

“The goal is to be the gold standard here, and to push the industry forward,” Jonas told me. “This is one of our first steps doing it.”


Claude Sonnet 4.5 arrives

One universal truth and one caveat to start. Universal truth: the worst day to evaluate a new model is the day that it comes out. Caveat: my boyfriend works at Anthropic. Feel free to apply an 80 percent discount rate to this item, should you like.

Over the weekend, I was invited to test a new model that turned out to be Claude Sonnet 4.5, which arrived today. It enables meaningful upgrades for people who write code — increasingly Anthropic’s core customer — including a faster and slightly redesigned Claude Code, and a new tool that effectively expands the memory of its large language model by selectively editing out less relevant information as the context window fills up.

For that reason, Claude can now run autonomously for longer periods of time. “Practically speaking, we’ve observed it maintaining focus for more than 30 hours on complex, multi-step tasks,” the company says. Consider that in May, Anthropic made headlines when its Opus 4 model successfully coded for seven hours. That’s a more than fourfold increase in four months — the kind of exponential curve that ought to interest the crowd that said GPT-5 suggested AI progress is stalling.

Claude is still priced higher than GPT-5, and Every CEO Dan Shipper said GPT-5 Codex found a bug in his code that Sonnet 4.5 didn’t. But two other people on his team said they will use 4.5 every day and prefer it to Codex. Figma CEO Dylan Field posted a six-minute video showing off what it could do in his company’s Make product. “We’re very impressed so far with how far it can plan ahead,” he said. Ethan Mollick called it “really good,” especially for finance and statistics applications.

The thing that shook me, though, was the company’s new Imagine experiment, which is now available for a limited time to paid Claude subscribers. (The company offers a short demo in this YouTube video.)

When GPT-5 came out, OpenAI CEO Sam Altman played up its ability to create “software on demand” — bespoke user interfaces that you could summon out of thin air just by describing them, even if (like me) you had no real experience writing code. My own experiments with GPT-5 found that it fell short on that dimension — it could create things that looked like the software I asked for, and implement some of their features, but in general they felt hacky and under-designed.

Imagine, which is powered by Sonnet 4.5, got me much closer to the mark. I used it to create a simple to-do app, which it built more quickly than GPT-5 had, with a design I liked better. Then I used it to create a Trello-style kanban board, and I was amazed at how quickly it did so. The final result would not put Atlassian out of business. But a world where total novices make custom apps with highly personalized aesthetics and feature sets now seems to be racing into view.

One place OpenAI still has the edge over Anthropic is in its ability to generate images and videos; I couldn’t help but wonder how good Imagine would have been for me had I been able to create my own design assets within the experience and make those part of my mini-apps. And neither company has taken what I see as the next obvious step here: offering some kind of one-click system to compile the code and let you download the finished product to run on your computer. (I realize there are probably five- and 10-click ways to do this, but I don’t have a PhD in software engineering!)

Anyway, I tell you all of this not to hype up Sonnet 4.5 but to draw your attention to the speed with which the state of the art is advancing. For now, Claude Code and Sonnet 4.5 are best thought of as professional tools for dedicated software development. But Imagine was the first time I could picture a world where hobbyists begin building custom apps that they describe with text and nothing more. For the moment that idea is very much in alpha. But the pace of improvements this year suggests that the beta is likely only a few months away.

Your feedback on our anniversary post

Last week, on the occasion of Platformer’s fifth anniversary, I wrote about the lessons I’ve learned. Thanks to the dozens of you who wrote in with your warm wishes and feedback. I’m steadily working through a backlog of messages, and I truly appreciate everyone who took the time to share their thoughts.

As part of the anniversary post, I shared some thoughts I’ve been working through on how to evolve the newsletter’s format and frequency.

On the format side, I shared that we’re planning to make some changes to our links section. Some of you like our links just the way they are; more of you, however, say you simply skim them or skip over them entirely. Our aim is to give you links that meaningfully enhance your understanding of the daily news cycle while giving you less to ignore. We now have a working prototype for a new approach that we plan to launch here next Monday, so look for that.

I also shared that we’re considering new investments in audio — both narrated columns and short-form audio updates that would be available primarily to paid subscribers. We’re now staffing up to develop some new technical infrastructure and workflows to accommodate some experiments; stay tuned for more on that.

Finally, I shared that I sometimes feel as if I can’t do the reporting I want to here because the thrice-weekly format makes it difficult to set my laptop aside and talk to people. I was taken aback — in the best way — by those of you who encouraged me to take occasional days off the column to go out and bring you some news. “I absolutely would trust that if you were to publish less, you’d be spending that time on some other investment in your endeavor that would only benefit me,” one of you wrote. “I would never think you were just taking unnecessary time off!”

One thing I love about writing a newsletter as a job is that I wake up every day knowing what to do: write a newsletter! Reporting often takes place on longer time cycles, with more dead ends. At the same time, that kind of reporting also creates most of the stories that have resonated most strongly with you.

And so: I’m going to experiment with this, too. The way I imagine it working: the day before taking a full reporting day, I’ll mention it in the newsletter. I’ll be judicious about how I use these, but I’d like to try this two or three times before the end of the year and see what I turn up.

Anyway, working for you all continues to be an enormous joy. I can’t tell you how much I appreciate having such brilliant readers thinking through what Platformer should and could be together.

The TikTok Sale

- UAE state-backed investment firm MGX, which boosted President Trump’s crypto venture earlier this month, will reportedly own a 15% stake in the new US TikTok spinoff, raising concerns of foreign influence. A White House official said nothing in the law prohibits foreign investment and American investors will still control a supermajority. (Drew Harwell / Washington Post)

- ByteDance will get about half of the profit from the US TikTok even after it sells majority ownership, sources said. (Bloomberg)

- A look at the key role JD Vance played in getting Trump his TikTok deal. (Natalie Allison and Drew Harwell / Washington Post)

- Critics say Trump’s plan to have Oracle protect US user data and the app’s algorithm is similar to “Project Texas,” a plan rejected by the Biden administration as it was deemed insufficient. (Alexandra S. Levine / Bloomberg)

- Investors are surprised at TikTok’s estimated valuation of $14 billion, which is far below previous estimates that were closer to $40 billion. (Bloomberg)

- A look at how TikTok’s powerful recommendation algorithm got its start and how it was shaped by a bunch of twentysomething curators. (Emily Baker-White / Forbes)

Governing

- YouTube has agreed to pay $24.5 million to settle a 2021 lawsuit brought by Trump over its suspension of his account following the Jan. 6 insurrection. To my knowledge, every single user who has ever sued over an account suspension has lost their effort to get it reinstated — and yet still YouTube caved. (Rebecca Ballhaus and Annie Linskey / Wall Street Journal)

- New YouTube accounts for conspiracy theorist Alex Jones and far-right commentator Nick Fuentes were taken down hours after they were created. YouTube said they were terminated because its efforts to reinstate banned creators have not yet begun. Is it just me or, based on the Trump settlement, don't these ghouls now have a better case for a lawsuit? (Josh Marcus / The Independent)

- Trump demanded Microsoft fire its head of global affairs Lisa Monaco over her work in the Biden administration. Hmm. Maybe give him $24.5 million and see if that helps? (Ben Berkowitz / Axios)

- The EU must either change its digital rules or prove they don’t punish US tech companies and infringe on free speech, Trump’s EU ambassador said. (Henry Foy and Barbara Moens / Financial Times)

- The US rejected calls for global AI oversight at the UN General Assembly, clashing with many heads of state. (Jared Perlo / NBC News)

- Leaked Meta guidelines on how its chatbot should respond to child sexual exploitation prompts now say it should refuse any prompt requesting sexual roleplay involving minors. Kind of crazy it didn't refuse them before! (Jyoti Mann / Business Insider)

- Video game publisher Electronic Arts said it agreed to be taken private in a $55 billion deal by a group of investors that include Trump’s son-in-law Jared Kushner and Saudi Arabia’s sovereign wealth fund. The deal would be the largest buyout ever. (Lauren Hirsch and Matthew Goldstein / New York Times)

- California Gov. Gavin Newsom signed a law that will force major AI companies to reveal their safety protocols — the first of its kind in the US. (Chase DiFeliciantonio / Politico)

- A look at how discovering the use of a “nudify” site galvanized a group of friends into a battle against AI-generated porn. (Jonathan Vanian / CNBC)

Industry

- Shares of Etsy and Shopify surged after OpenAI announced Instant Checkout, a new feature that allows users to buy products through ChatGPT. (Ashley Capoot / CNBC)

- A look at how to use the new feature. (Sabrina Ortiz / ZDNET)

- OpenAI is reportedly preparing to launch a standalone app for its video generator Sora 2, which will feature a vertical video feed similar to TikTok — but all AI-generated. Will be fun to compare and contrast with Meta's Vibes, which arrived to near-universal disdain. (Zoë Schiffer and Louise Matsakis / Wired)

- The new version of Sora will create videos featuring copyrighted material unless copyright holders opt out, sources said. (Keach Hagey, Berber Jin and Ben Fritz / Wall Street Journal)

- OpenAI is still looking for ways to finance its plan to spend trillions on infrastructure. (Shirin Ghaffary / Bloomberg)

- Oracle and smaller AI companies are leveraging debt to vault themselves to the AI forefront. Lotta debt piling up in AI world lately! (Asa Fitch / Wall Street Journal)

- A look at the sketchy accounting that many startups are now using to boost their annual recurring revenue figures. (Allie Garfinkle / Fortune)

- OpenAI launched its first series of ads for ChatGPT. (Tim Nudd / AdAge)

- Top AI companies including Google DeepMind, Meta and Nvidia are developing systems that learn the physical world through videos and robotic data in their pursuit of achieving superintelligence. There's a lot more chatter lately about "world models" as the next big thing in achieving AGI. (Cristina Criddle, Hannah Murphy and Tim Bradshaw / Financial Times)

- DeepSeek updated its experimental AI model to introduce a mechanism intended to improve efficiency in processing long text sequences. (Saritha Rai / Bloomberg)

- Meta is rolling out paid, ad-free versions of Facebook and Instagram in the UK. (Shona Ghosh and Gian Volpicelli / Bloomberg)

- Threads has surpassed X in daily active users worldwide for the first time, new data shows. I predicted this would happen in 2024. A bit late, but Meta got there. (Conor Murray / Forbes)

- Humanoid robots are Meta's next big bet, CTO Andrew Bosworth said. How long until my Meta home robot interrupts me at work to suggest I check out what's new in the Vibes feed? (Alex Heath / The Verge)

- Microsoft is launching new AI agents in its Office apps, powered by Anthropic models, which allow users to access a “vibe working” chatbot. (Tom Warren / The Verge)

- YouTube is testing AI hosts for radio and mixes in the YouTube Music app. (Jay Peters / The Verge)

- At a meeting, AWS CEO Matt Garman admonished staff for what he said were slow product rollouts. Amazon said the account was misinterpreted, and it was actually an inspiring internal conversation. If you were present for this conversation and felt inspired, please email us. (Greg Bensinger / Reuters)

- Former Yahoo CEO Marissa Mayer is shutting down her consumer AI startup Sunshine. (Lauren Goode and Zoë Schiffer / Wired)

- IT consulting group Accenture said it will ask more staff to leave if they cannot be retrained for the age of AI, following its recent layoffs of more than 11,000 employees. (Stephen Foley / Financial Times)

- Snapchat is imposing a new storage limit on its Memories feature and introducing paid storage plans. Unsurprising but still disappointing enshittification here. (Jackson Chen / Engadget)

Those good posts

For more good posts every day, follow Casey’s Instagram stories.


Talk to us

Send us tips, comments, questions, and parental controls: casey@platformer.news. Read our ethics policy here.