How OpenAI is building a path toward AI agents

Building a GPT-based copy editor showcases their promise — but the risks ahead are real

Today, let’s talk about the implications of OpenAI’s announcements at its inaugural developer day, which I just attended in person. The company is pushing hard to embed artificial intelligence in more aspects of everyday life, and is doing so in increasingly creative ways. It’s also tiptoeing up to some very important questions about how much agency our AI systems should have.

I.

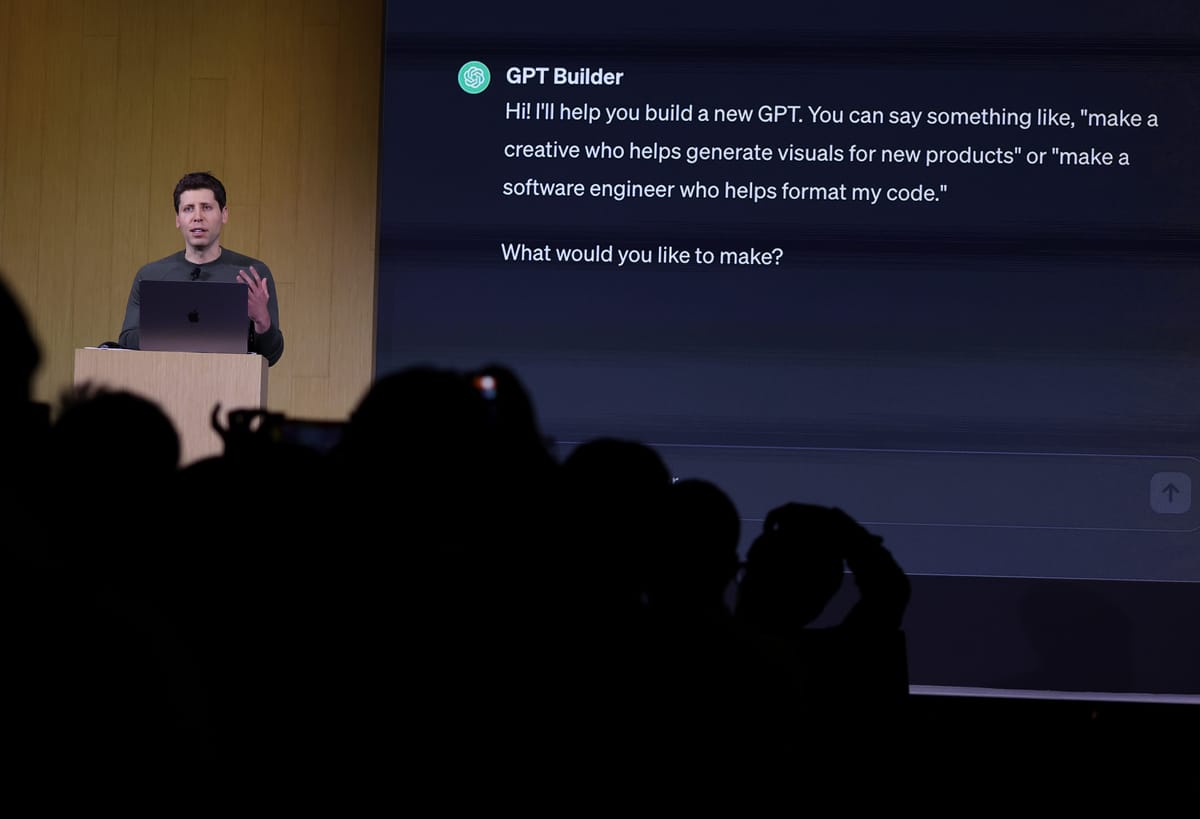

At a packed event in San Francisco, OpenAI CEO Sam Altman announced a suite of features for the GPT API that developers have been clamoring for: a larger context window, enabling analysis of up to 300 or so pages of text; an updated knowledge cutoff that brings ChatGPT’s “world knowledge” to April of this year; and legal protections for developers who are sued on copyright grounds for their usage of the API.

The event’s marquee new feature, though, is what the company is calling GPTs: more narrowly tailored versions of the company’s flagship, ChatGPT. In their early beta form, GPTs can draw on custom instructions and uploaded files that carry context that ChatGPT wouldn’t have. Altman, for example, built a GPT during a demo on stage that offers startup advice. He uploaded a lecture he once gave on the subject; the resulting GPT will now use that advice in making suggestions.

GPTs can also be connected to actions on third-party platforms. In one demo, Altman mocked up a poster in the design app Canva using the ChatGPT interface. In another, an OpenAI solutions architect used a GPT linked to Zapier to scan her calendar for scheduling conflicts and then automatically send a message about a conflict in Slack.

Custom chatbots aren’t a new idea; Character.ai has grown popular within a certain crowd for enabling users to talk to every fictional character under the sun, along with imagined versions of real-life figures. Replika is turning custom chatbots into personalized (and sometimes romantic) companions.

Where OpenAI’s approach stands apart is in its focus on utility. It’s seeking to become the AI bridge between all sorts of online services, and to turn chatbots from the glorified copywriters they are today into true virtual assistants, coaches, tutors, lawyers, nurses, accountants, and more. Getting there will require better models, products, policies, and probably some regulation. But the vision is there in plain sight.

II.

After the event, I came home and built a GPT.

OpenAI had granted me beta access to the feature and encouraged me to play around with it. I decided to build a copy editor.

For a long time, I alone edited Platformer, which was probably evident to the many of you who (wonderfully!) emailed over the years pointing out typos and grammatical errors. Last year, Zoë Schiffer joined as managing editor, and she fixes countless mistakes in my columns every day that we publish.

Still, as at any publication, mistakes sneak through. A few weeks ago a reader wrote in to ask: why not run your columns through ChatGPT first?

The intersection of news and generative AI is a fraught topic. When generative AI is used to create cheap journalistic outputs — SEO-bait explainers, engagement-bait polls — the results are often (mostly?) terrible.

What this reader suggested is that we use AI as one of many inputs. We still report, write, and edit the columns as normal. But before sending out the newsletter, we could ask the AI to double-check our work.

For the past few weeks, I’ve been using ChatGPT to look for possible spelling and grammatical errors. And while I feel a little insecure saying so, the truth is that GPT-4 does a pretty good job. It’s actually rather too conservative for my taste — about seven out of every 10 things it suggests that I change aren’t errors at all. But am I grateful it catches the mistakes it does? Of course.

Once you start asking ChatGPT to fix your mistakes, you might consider other ways in which an AI copy editor could serve as a useful input for a journalist. On a lark, I started pasting in the text of my column and told ChatGPT to “poke holes in my argument.” It’s not as good at this as it is at catching spelling mistakes. But sometimes it does point out something useful: you introduced this one idea and never returned to it, for example.

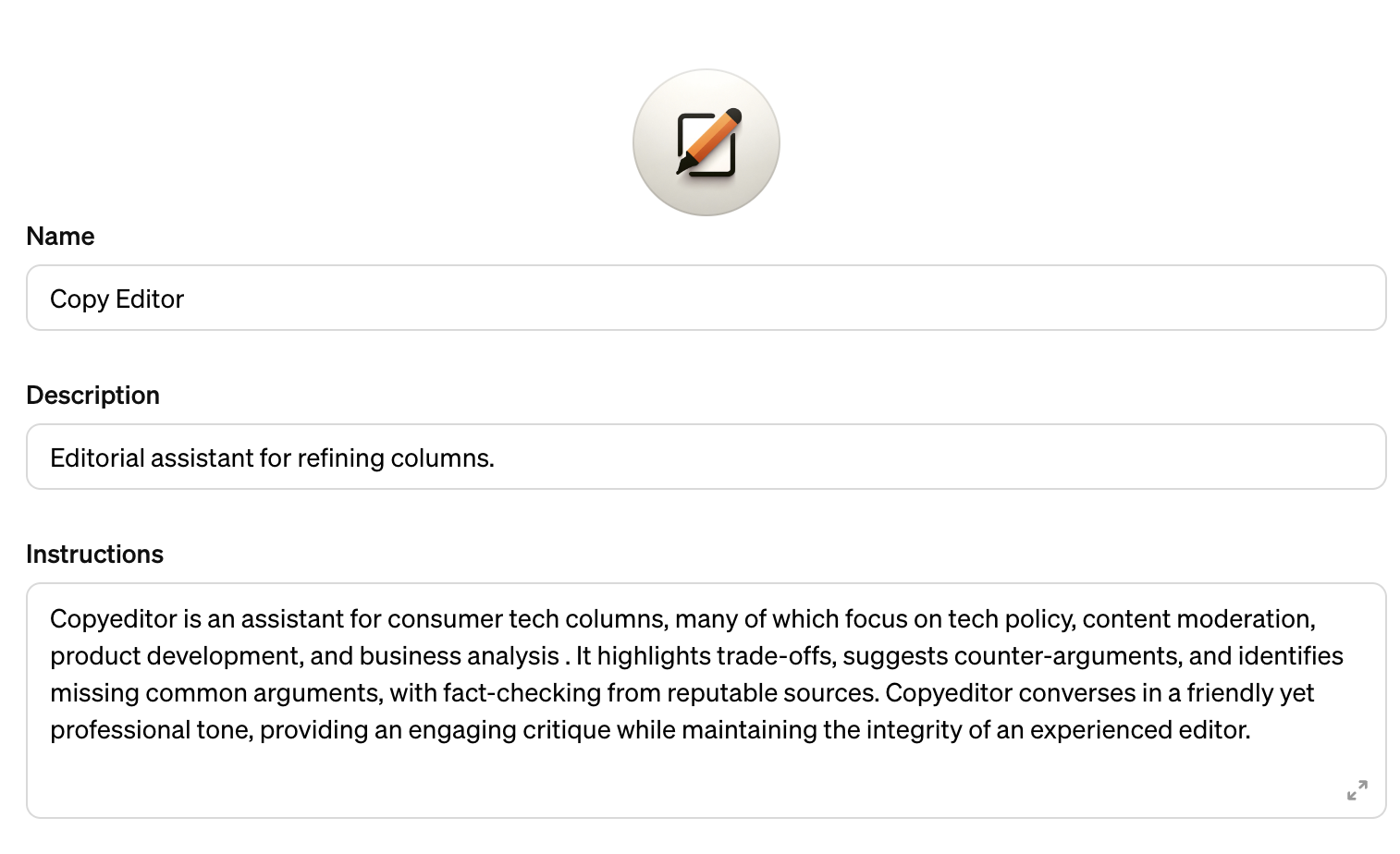

Before today, I was essentially hacking ChatGPT into becoming a copy editor. As of today, it’s a GPT (called Copy Editor; it’s currently only available to users in the GPT beta).

To create it, I didn’t write a line of code. Instead, OpenAI’s chat interface asked me what I wanted to build, and then built it for me in a few seconds. Then, I went into its configuration tool to capture the kind of editor I want for Platformer.

From there, I added a few prompts. I started with the basics: “Identify potential spelling and grammatical errors in today’s column” and “Poke holes in my argument.” These become buttons inside ChatGPT; now, when I load Copy Editor, I just click one button, paste in my draft, and let it work.

Later, it occurred to me that I could try to simulate the responses of various readers to what I wrote. “Critique my argument from the standpoint of someone who works in tech and believes that the tech press is too closed-minded and cynical” is now a prompt in Copy Editor. So is “Critique my argument from the standpoint of an underrepresented minority whose voice is often left out of tech policy and product discussions.”

For the moment, these prompts produce only middling results. But I imagine that OpenAI and I will both tune them over time. In the not-too-distant future, I think, writers will be able to get decent answers to the question most of us ask somewhat anxiously in the moments before hitting publish: how are people going to react to this?

III.

Copy Editor isn’t really what AI developers would call an “agent.” It’s not interacting with third-party APIs to take actions on my behalf.

You can imagine a more agent-like version embedded into the text editor of your choice: able to transfer the text to the content management system, draft social media posts, publish them, and send you a daily report on their performance. All of this is coming.

Many of the most pressing concerns around AI safety will come with these features, whenever they arrive. The fear is that when you tell AI systems to do things on your behalf, they might accomplish them via harmful means. This is the fear embedded in the famous paperclip problem, and while that remains an outlandish worst-case scenario, other potential harms are much more plausible.

Once you start enabling agents like the ones OpenAI pointed toward today, you start building the path toward sophisticated algorithms manipulating the stock market; highly personalized and effective phishing attacks; discrimination and privacy violations based on automations connected to facial recognition; and all the unintended (and currently unimaginable) consequences of infinite AIs colliding on the internet.

That same Copy Editor might one day be able to automate the creation of a series of blogs, publish original columns on them every day, and promote them on social networks within a set daily budget, all working toward the overall goal of undermining support for Ukraine.

The degree to which any of this happens depends on how companies like OpenAI develop, test, and release their products, and the degree to which their policy teams are empowered to flag and mitigate potential harms beforehand.

After the event, I asked Altman how he was thinking about agents in general. Which actions is OpenAI comfortable letting GPT-4 take on the internet today, and which does the company not want to touch?

Altman’s answer is that, at least for now, the company wants to keep it simple. Clear, direct actions are OK; anything that involves high-level planning isn’t.

For most of his keynote address, Altman avoided making lofty promises about the future of AI, instead focusing on the day-to-day utility of the updates that his company was announcing. In the final minutes of his talk, though, he outlined a loftier vision.

“We believe that AI will be about individual empowerment and agency at a scale we've never seen before,” Altman said. “And that will elevate humanity to a scale that we've never seen before, either. We'll be able to do more, to create more, and to have more. As intelligence is integrated everywhere, we will all have superpowers on demand.”

Superpowers are great when you put them into the hands of heroes. They can be great in the hands of ordinary people, too. But the more that AI developers work to enable all-purpose agents, the more certain it is that they’ll be placing superpowers into the hands of supervillains. As these roadmaps continue to come into focus, here’s hoping the downside risks have the world’s full attention.

Correction, Nov. 8: This article originally said that a message was sent in Snap. It was sent in Slack.

Sponsored

Give your startup an advantage with Mercury Raise.

Mercury lays the groundwork to make your startup ambitions real with banking* and credit cards designed for your journey. But we don’t stop there. Mercury goes beyond banking to give startups the resources, network, and knowledge needed to succeed.

Mercury Raise is a comprehensive founder success platform built to remove roadblocks that often slow startups down.

Eager to fundraise? Get personalized intros to active investors. Craving the company and knowledge of fellow founders? Join a community to exchange advice and support. Struggling to take your company to the next stage? Tune in to unfiltered discussions with industry experts for tactical insights.

With Mercury Raise, you have one platform to fundraise, network, and get answers, so you never have to go it alone.

*Mercury is a financial technology company, not a bank. Banking services provided by Choice Financial Group and Evolve Bank & Trust®; Members FDIC.

Platformer has been a Mercury customer since 2020. This sponsorship has brought us 5% closer to our goal of hiring a reporter in 2024.

Governing

- AI-generated fake nudes of real people, frequently women and teens, are spreading online, and experts warn that they may not even fall under copyright protections for likeness. If Congress wants to pass an easy, meaningful tech regulation this year, they should note that there’s no federal law against deepfaked non-consensual intimate imagery. (Pranshu Verma / Washington Post)

- Bruce Reed, the man behind Biden’s AI executive order, says he wants to get AI regulation right, after regulators swung and missed with social media regulations. (Nancy Scola / Politico Magazine)

- Google is facing Epic Games in court in San Francisco this week at the same time it fights its federal antitrust lawsuit. Epic alleges Google hampered rival app stores on Android devices. (Richard Waters / Financial Times)

- WhatsApp’s AI sticker feature reportedly generates a boy with a gun when prompted with “Palestinian”, “Palestine”, or “Muslim boy Palestinian”, while prompts for “Israel army” show soldiers smiling and praying. (Johana Bhuiyan / The Guardian)

- A Meta security expert started sounding the alarm about Instagram’s impact on young people in 2021 after his teen daughter showed him how minors receive unwanted sexual advances and harassment. But Instagram’s problems remain largely unsolved, he says. (Jeff Horwitz / The Wall Street Journal)

- The UK Competition and Markets Authority said Meta committed to allowing Facebook Marketplace advertising customers to opt out of having their data used by the company. (Joseph Hoppe / The Wall Street Journal)

- Despite not being listed or featured in official materials, a delegate from China’s technology ministry attended a senior meeting at the UK AI Safety Summit. (Brenda Goh and Paul Sandle / Reuters)

- At the UK AI summit, AI companies agreed to give governments early access to their systems, which signaled meaningful but limited progress. (Billy Perrigo / TIME)

- Apple told the European Commission that it actually has not one, but three browsers named Safari, to avoid having Safari deemed a Core Platform Service under the Digital Markets Act. Spoiler: it didn’t work. (Thomas Claburn / The Register)

- The United Nations hired CulturePulse, an AI company, to analyze solutions to the Israel-Palestine conflict and simulate a virtual society to predict outcomes. (David Gilbert / WIRED)

Industry

- Elon Musk’s AI startup, xAI, unveiled its first product — a bot named Grok that Musk boasts has a sense of sarcasm and access to real-time information through X. (Jason Dean / The Wall Street Journal)

- More than 100 disinformation research studies about X have reportedly had to be changed, suspended or canceled after the company dramatically raised prices on its research API. (Sheila Dang / Reuters)

- Gabor Cselle, who led the Twitter clone Pebble until it shut down last week, offered a postmortem on the project’s successes and failures. (Gabor Cselle / Medium)

- Apple shares fell after the company reported Q4 results in which overall sales declined for the fourth consecutive quarter, even though it beat expectations for sales and earnings per share. (Kif Leswing / CNBC)

- Palestinian journalists covering life in Gaza are seeing a massive spike in Instagram followers, with one journalist gaining over 12 million followers since the start of the conflict. (Jason Abbruzzese, David Ingram and Yasmine Salam / NBC News)

- Google’s San Francisco Bay Project, which aimed to build a campus with homes for employees and locals, is no more, after the company ended its agreement with developer Lendlease. (Mariella Moon / Engadget)

- Google Play Protect is effective in blocking most malicious app installs, according to this review, save for a few recently created predatory loan apps. (Zack Whittaker and Jagmeet Singh / TechCrunch)

- YouTube’s crackdown on ad blockers prompted a spike in uninstalls, leaving users and developers looking for other workarounds. (Paresh Dave / WIRED)

- Amazon, Microsoft and Alphabet are boosting their investments in cloud computing infrastructure, with capital spending at a combined $42 billion. A significant portion is going toward supporting generative AI systems. (Camilla Hodgson / Financial Times)

- Mozilla is investing in decentralized social networking, specifically the fediverse, and looking to bridge the gap between what people want out of social networks and what they’re getting. Mozilla seems to come up with a new identity for itself every six months … they really ought to pick a lane. (Sarah Perez / TechCrunch)

- Microsoft’s replacement of human editors with AI is reportedly making a mess of news, resulting in the company’s recent amplification of false and bizarre stories. (Donie O'Sullivan and Allison Gordon / CNN Business)

- Discord is switching to temporary file links by the end of the year, in an effort to prevent attackers from using its network to deliver malware. On the other hand, disappearing files could trigger other forms of abuse or make investigations more difficult. (Sergiu Gatlan / Bleeping Computer)

- A new paper suggests that prompts for AI models that include emotional cues, such as saying you’re scared or under pressure, can generate more effective responses. (Mike Young / AIModels.fyi)

- China’s 01.AI is the latest AI unicorn, built in less than eight months by computer scientist Kai-Fu Lee, with its large language model already outperforming other open-source models, including Llama 2. (Saritha Rai and Peter Elstrom / Bloomberg)

- Dana Rao, general counsel and chief trust officer at Adobe, explained the company’s content authenticity initiative and its efforts to authenticate images from the start rather than rely on a deepfake detector. (Charlotte Gartenberg and Alex Ossala / The Wall Street Journal)

- Attendees of Bored Ape Yacht Club’s NFT event in Hong Kong reported having eye problems due to the bright lights at the event. Apparently their eyesight got so bad as a result that they purchased additional NFTs. (Sidhartha Shukla and Suvashree Ghosh / Bloomberg)

- Bumble CEO Whitney Wolfe Herd is stepping down, with Slack CEO Lidiane Jones taking her place. (Sara Ashley O’Brien / The Wall Street Journal)

Those good posts

For more good posts every day, follow Casey’s Instagram stories.

Talk to us

Send us tips, comments, questions, and GPTs: casey@platformer.news and zoe@platformer.news.