What Instagram really learned from hiding like counts

PLUS: WhatsApp sues India

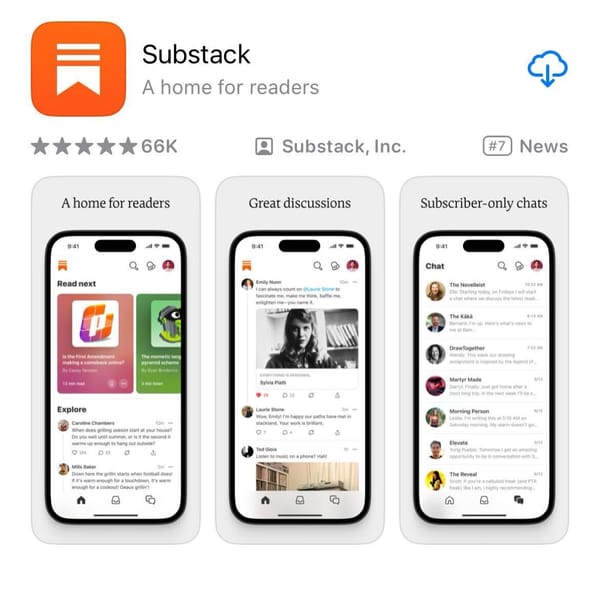

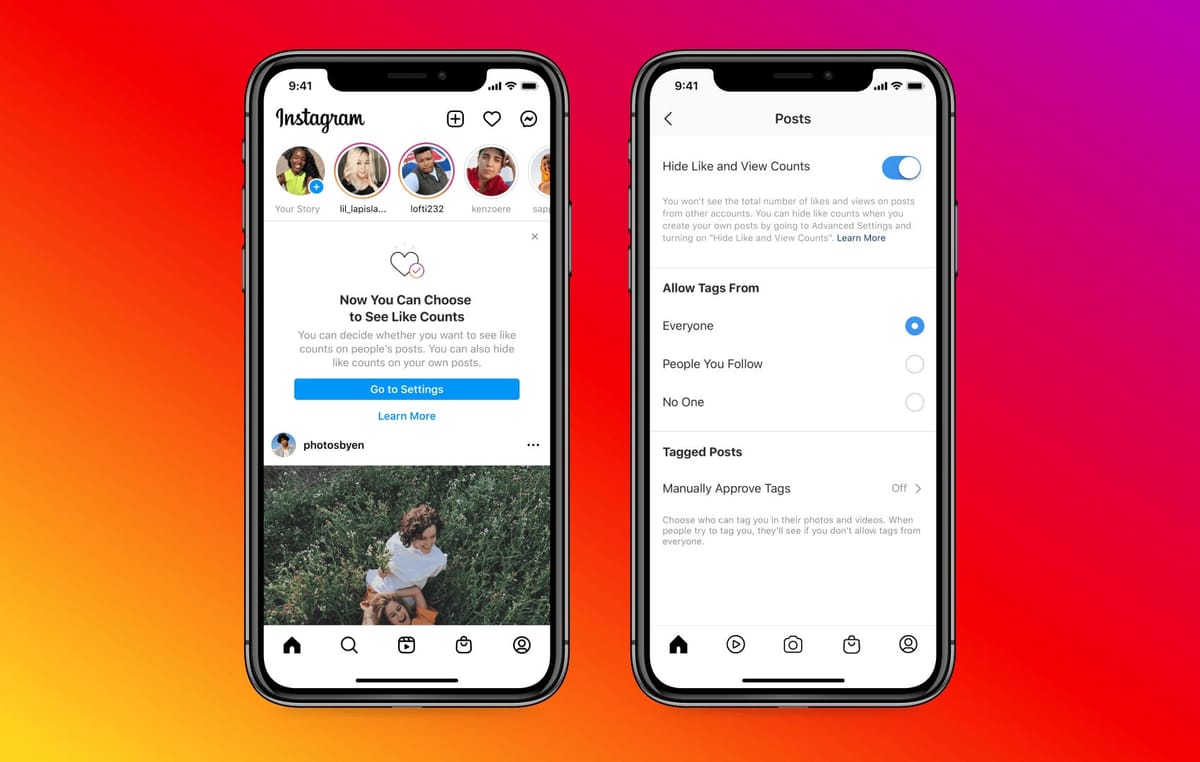

In April 2019, amid growing questions about the effects of social networks on mental health, Instagram announced it would test a feed without likes. The person posting an image on the network would still see how many people had sent it a heart, but the total number of hearts would remain invisible to the public.

“It’s about young people,” Instagram chief Adam Mosseri said that November, just ahead of the test arriving in the United States. “The idea is to try and depressurize Instagram, make it less of a competition, give people more space to focus on connecting with people that they love, things that inspire them. But it’s really focused on young people.”

After more than two years of testing, today Instagram announced what it found: removing likes doesn’t seem to meaningfully depressurize Instagram, for young people or anyone else, and so likes will remain publicly viewable by default. But all users will now get the ability to switch them off if they like, either for their whole feed or on a per-post basis.

“What we heard from people and experts was that not seeing like counts was beneficial for some, and annoying to others, particularly because people use like counts to get a sense for what’s trending or popular, so we’re giving you the choice,” the company said in a blog post.

At first blush, this move feels like a remarkable anticlimax. The company invested more than two years in testing these changes, with Mosseri himself telling Wired he spent “a lot of time on this personally” as the company began the project. For a moment, it seemed as if Instagram might be on the verge of a fundamental transformation — away from an influencer-driven, social-media reality show toward something more intimate and humane.

In 2019, this no-public-metrics, friends-first approach had been perfected by Instagram’s forever rival, Snapchat. And the idea of stripping out likes, view counts, followers and other popularity scoreboards gained traction in some circles — the artist Ben Grosser’s Demetricator project made a series of tools that implemented the idea via browser extensions, to positive reviews.

So what happened at Instagram?

“It turned out that it didn't actually change nearly as much about … how people felt, or how much they used the experience as we thought it would,” Mosseri said in a briefing with reporters this week. “But it did end up being pretty polarizing. Some people really liked it, and some people really didn't.”

On that last point, he added: “You can check out some of my @-mentions on Twitter.”

While Instagram ran its tests, a growing number of studies found only limited evidence linking the use of smartphones or social networks to changes in mental health, the New York Times reported last year. Just this month, a 30-year study of teenagers and technology from Oxford University reached a similar finding.

Note that this doesn’t say social networks are necessarily good for teenagers, or anyone else. Just that they don’t move the needle very much on mental health. Assuming that’s true, it stands to reason that changes to the user interface of individual apps would also have a limited effect.

At the same time, I wouldn’t write off this experiment as a failure. Rather, I think it highlights a lesson that social networks are often too reluctant to learn: rigid, one-size-fits-all platform policies are making people miserable.

Think of the vocal minority of Instagram users who would like to view their feed chronologically, for example. Or the Facebook users who want to pay to turn off ads. Or look at all the impossible questions related to speech that are decided at a platform level, when they would be better resolved at a personal one.

Last month, Intel was roasted online after showing off Bleep, an experimental AI tool for censoring voice chat during multiplayer online video games. If you’ve ever played an online shooter, chances are you haven’t gone a full afternoon without being subjected to a barrage of racist, misogynist, and homophobic speech. (Usually from a 12-year-old.) Rather than censor all of it, though, Intel said it would put the choice in users’ hands. Here’s Ana Diaz at Polygon:

The screenshot depicts the user settings for the software and shows a sliding scale where people can choose between “none, some, most, or all” of categories of hate speech like “racism and xenophobia” or “misogyny.” There’s also a toggle for the N-word.

An “all racism” toggle makes us understandably upset, even if hearing all racism is currently the default for most in-game chat today, and the screenshot generated many worthwhile memes and jokes. Intel explained that it built settings like these to account for the fact that people might accept hearing language from friends that they won’t from strangers.

But the basic idea of sliders for speech issues is a good one, I think. Some issues, particularly those related to non-sexual nudity, vary so widely across cultures that forcing one global standard on them, as is the norm today, seems ludicrous. Letting users build their own experience, from whether their like counts are visible to whether breastfeeding photos appear in their feed, feels like the clear solution.

There are some obvious limits here. Tech platforms can’t ask users to make an unlimited number of decisions, as it introduces too much complexity into the product. Companies will still have to draw hard lines around tricky issues, including hate speech and misinformation. And introducing choices won’t change the fact that, as in all software, most people will simply stick with the defaults.

All that said, expanded user choice is clearly in the interest of both people and platforms. People can get software that maps more closely to their cultures and preferences. And platforms can offload a series of impossible-to-solve riddles from their policy teams to an eager user base.

There are already signs beyond today’s announcement that this future is arriving. Reddit offered us an early glimpse with its policy of setting a hard “floor” of rules for the platform, while letting individual subreddits raise the “ceiling” by introducing additional rules. Twitter CEO Jack Dorsey has forecast a world in which users will be able to choose from different feed ranking algorithms.

With his decision on likes, Mosseri is moving in the same direction.

“It ended up being that the clearest path forward was something that we already believe in, which is giving people choice,” he said this week. “I think it's something that we should do more of.”

WhatsApp sues India

What will become of American social networks in India? It’s a question I’m exploring more and more in Platformer as the Modi government’s free-speech crackdown escalates; just this week, police raided Twitter’s (empty) Delhi headquarters in an attempt to bully the company into submission.

While the outcome is uncertain, the basic path of these conflicts usually leads to the same place: a courtroom. And indeed, today Facebook-owned WhatsApp sued the Indian government. Here’s Joseph Menn at Reuters:

The case asks the Delhi High Court to declare that one of the new IT rules is a violation of privacy rights in India's constitution since it requires social media companies to identify the "first originator of information" when authorities demand it, people familiar with the lawsuit told Reuters. […]

WhatsApp says that because messages are encrypted end-to-end it would have to break encryption for receivers of messages as well as the originators to comply with the new law.

WhatsApp told Reuters: “Requiring messaging apps to 'trace' chats is the equivalent of asking us to keep a fingerprint of every single message sent on WhatsApp, which would break end-to-end encryption and fundamentally undermines people's right to privacy."

I’m with WhatsApp on this one; I wrote here in March about how India’s moves threatened to break encryption worldwide. It’s particularly galling that the Modi government’s stated rationale for the move is tracking “originators of disinformation” — a statement made the same week that it raided Twitter’s offices because the company accurately labeled a party spokesman’s tweet that had been Photoshopped.

Here’s hoping that WhatsApp prevails in court; I find the alternative almost too bleak to consider. And in the meantime, if Modi’s government is so worried about “originators of disinformation,” it could start by no longer tweeting faked documents.

The Ratio

Today in news that could affect public perception of the big tech companies.

⬆️ Trending up: Platforms have largely been successful in removing most QAnon content from the mainstream internet, a new report found. “Of all factors, data analyzed by the DFRLab found that reductions correlated most strongly with social media actions taken by Facebook, Twitter, and Google to limit or remove QAnon content.” (Jared Holt and Max Rizzuto / DFRLab)

⬇️ Trending down: Oracle peddled its social media surveillance tech to police departments in China. This despite the fact that it is partially funded by the CIA. (Mara Hvistendahl / The Intercept)

⬇️ Trending down: YouTube allowed a pro-government Belarus TV channel to run ads featuring hostage videos of detained activist Roman Protasevich and his girlfriend, Sofia Sapega. Belarus has long used YouTube ads to spread propaganda, journalists there say; these ads were removed. (Louise Matsakis / Rest of World)

Governing

⭐ Facebook will start reducing the reach of personal pages that repeatedly share misinformation. Here’s Kurt Wagner at Bloomberg:

Under the new system, Facebook will “reduce the distribution of all posts” from people who routinely share misinformation, making it harder for their content to be seen by others on the service. The company already does this for Pages and Groups that post misinformation, but it hadn’t previously extended the same policy to individual users. Facebook does limit the reach of posts that have been flagged by fact-checkers, but there wasn’t a broader penalty for account holders who share misinformation. […]

The Menlo Park, California-based company will also start showing users a pop-up message if they click to “like” a page that routinely shares misinformation, alerting them that fact-checkers have previously flagged that page’s posts. “This will help people make an informed decision about whether they want to follow the Page,” the company wrote in a blog post.

The European Commission is expected to formally ask Facebook, Google, and Twitter to alter their algorithms to prevent the spread of misinformation, and “prove that they have done so.” It’s unclear to me whether this would essentially be expanded transparency reporting requirements, or some kind of under-the-hood examination of algorithms by regulators. (Mark Scott / Politico)

The European Union is preparing a formal antitrust investigation into Facebook focused on its Marketplace product. “The probe, which stems from complaints from competitors, looks at least in part at how Facebook allegedly favors Marketplace … at the expense of other companies that sell products through Facebook.” (Sam Schechner / Wall Street Journal)

Facebook named the United States as one of the top five countries where influence operations originate. In a new white paper about the past three years of influence operations, America “ranked fourth on Facebook's list, behind Russia, Iran and Myanmar and just ahead of Ukraine.” (Issie Lapowsky / Protocol)

A look at Russia’s dramatic escalation of content removal demands, focused on Facebook, Twitter, and Google. “On Wednesday, the government ordered Facebook and Twitter to store all data on Russian users within the country by July 1 or face fines.” (Adam Satariano and Oleg Matsnev / New York Times)

A group of Israeli journalists called on Facebook and Twitter to take “decisive action” after a wave of threats against them posted online in retaliation for covering the conflict with Palestine. “In some cases, the incitement resulted in deliberate attacks on reporters while reporting, the letters alleged.” (Steven Scheer / Reuters)

Gaza-based journalists say their accounts were blocked by WhatsApp. “According to the Associated Press, 17 journalists in Gaza confirmed their WhatsApp accounts had been blocked since Friday.” (Al Jazeera)

Australia is investigating OnlyFans for potential links to financial crime. The country’s financial crimes watchdog is looking into issues including whether minors are being featured in sexually explicit content. (Gerald Cockburn / New Zealand Herald)

The city of San Jose approved Google’s plan for a huge downtown campus after three years of negotiations. “With the vote on Tuesday, Google can move forward with an 80-acre development plan near San Jose’s central rail hub at Diridon Station, including 4,000 new homes, more than 7 million square feet of office space, 15 acres of parks and 500,000 square feet of retail and other space.” (Lauren Hepler / San Francisco Chronicle)

The Delhi High Court ordered that one of its own rulings be removed from Google search results. After a US citizen of Indian origin was acquitted of a drug crime, he complained that search results for his name made it impossible for him to get a job. (Abhinav Garg / Times of India)

A hacktivist posted a massive scrape of the crime app Citizen to the dark web. The data covers 1.7 million incidents, and may be of interest to journalists and academics. (Joseph Cox / Vice)

Industry

⭐ Amazon will buy MGM Studios for $8.45 billion. It’s the company’s second-biggest acquisition after Whole Foods. Here’s Annie Palmer at CNBC:

Amazon said it hopes to leverage MGM’s storied filmmaking history and wide-ranging catalog of 4,000 films and 17,000 TV shows to help bolster Amazon Studios, its film and TV division.

“The real financial value behind this deal is the treasure trove of IP in the deep catalog that we plan to reimagine and develop together with MGM’s talented team,” said Mike Hopkins, senior vice president of Prime Video and Amazon Studios. “It’s very exciting and provides so many opportunities for high-quality storytelling.”

Andy Jassy will become Amazon CEO on July 5, 27 years to the day after the company was incorporated. The announcement was made at the company’s annual shareholder meeting. (Jay Peters / The Verge)

Related: During the shareholder meeting today, Jeff Bezos said the retail industry is far more competitive than the smartphone industry. “Think about mobile phone operating systems. Can you think of any successful, small, fast growing mobile phone operating systems? Where are they? Name one. They do not exist.” (Matt Day / The Verge)

Instagram launched a new section for e-commerce “drops.” Flash sales offering limited inventory have grown in popularity, and now they’re part of the Shop tab. Instagram will eventually collect fees from the sales. (Sarah Perez / TechCrunch)

TikTok changed its text-to-speech voice after the original voice actor sued. Bev Standing argued her original voice work was only meant for translations. (Jacob Kastrenakes / The Verge)

Google struck a deal with national hospital chain HCA Healthcare to develop healthcare algorithms using patient records. “Google and HCA engineers will work to develop algorithms to help improve operating efficiency, monitor patients and guide doctors’ decisions, according to the companies.” (Melanie Evans / Wall Street Journal)

Clubhouse unveiled the first participants in its Creator First accelerator. An admirably diverse group, I’ll say that much. (Clubhouse)

Tiger Global led a $30 million investment in Koo, a Twitter alternative in India. Something to fill the void for when Twitter is eventually banned there. (Manish Singh / TechCrunch)

Those good tweets

Airbnb got too much dip on they chip. They’re about to fall off. No one is gonna continue to pay $500 to stay in an apartment for two days when they can pay $300 for a hotel stay that has a pool, room service, free breakfast & cleaning everyday. Like get real lol

— 🥀 (@euphorixa) 3:50 PM ∙ May 18, 2021

what if f scott fitzgerald came back up to earth and said “it’s pronounced jatsby” and left

— anja (@internetanja) 8:41 PM ∙ May 22, 2021

“fuck around and find out” is literally the scientific method

— zellie (@zellieimani) 5:23 AM ∙ May 25, 2021

“Shark infested waters”.... you mean their home????😭

— ⱫłØ₦👑 (@blackisjesus_) 6:35 AM ∙ Jun 30, 2020

Talk to me

Send me tips, comments, questions, and Instagram likes: casey@platformer.news.