Nick Clegg tries to reset the conversation

Facebook's VP of global affairs talks to us about algorithms, polarization, and the Trump decision

The United States has changed profoundly in the weeks since Donald Trump was dislodged from office and Joe Biden assumed the role. The rate of vaccinations keeps accelerating, a national recovery act has passed, and a press corps suddenly bereft of scandal has found itself covering the droppings of the First Dogs.

In at least one respect, though, not much has changed at all: the last administration didn’t like Facebook, and the current one really doesn’t either.

Broad bipartisan disdain for Facebook has been a theme in the five Congressional hearings involving the company since last summer. It has manifested itself in multiple ongoing antitrust lawsuits, privacy inquiries, and other skirmishes around the world. (India is currently suing the company to prevent WhatsApp from changing its terms of service, to name just one example.)

Meanwhile, the idea that Facebook’s design rewards polarization and is fraying democracy is now firmly embedded in pop culture: The Social Dilemma, while a terrible movie, also seems to have been a bona fide hit for Netflix.

It’s in this context that I spoke to Nick Clegg. Today Clegg, who joined Facebook as its head of global affairs in October 2018, published “You and the Algorithm: It Takes Two to Tango,” a 5,000-word blog post that sets out to reset the conversation about Facebook on terms more favorable to the company.

It’s a post that acknowledges the way ranking systems can create fear and uncertainty while also making the case that individual users ultimately are, and should be, in control of what they see.

“Faced with opaque systems operated by wealthy global companies, it is hardly surprising that many assume the lack of transparency exists to serve the interests of technology elites and not users,” Clegg writes. “In the long run, people are only going to feel comfortable with these algorithmic systems if they have more visibility into how they work and then have the ability to exercise more informed control over them.”

To that end, the company is making it easier to reduce the amount of algorithmic ranking in the News Feed, if you don’t trust Facebook or simply want to build your own rankings. Here’s James Vincent at The Verge:

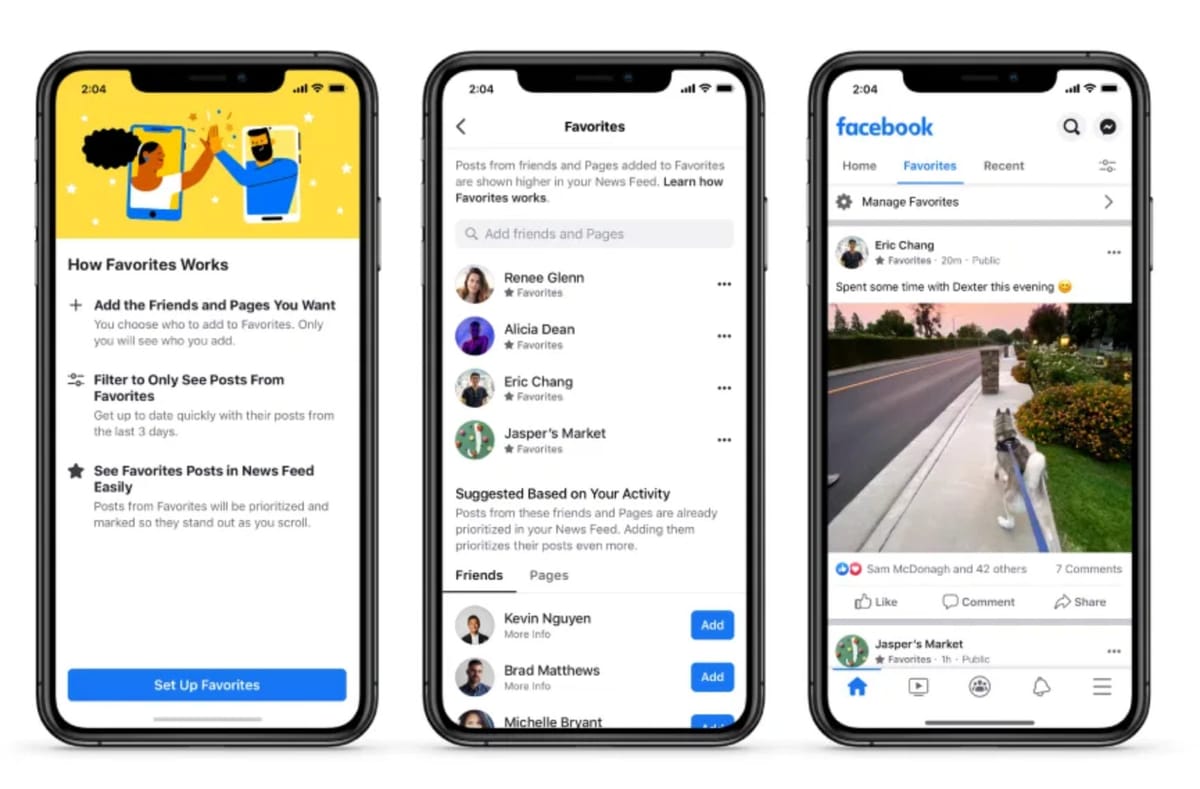

The changes build on previous tweaks to News Feed functionality. Last October, Facebook introduced a “Favorites” tool that allows users to select up to thirty friends and pages, prioritizing their content or displaying it in a separate feed. The company also offers users the option to sort their feeds by “most recent,” but buries these options in obscure menus.

Facebook is now making these “Favorites” and “Recent” filters much more prominent, putting them right at the top of the News Feed as separate tabs that users can switch between.

You can see the filters in the screenshot at the top of this post.

It’s too soon to tell how much additional control Facebook will ultimately give users over their feeds. Philosophically, at least, it could represent a step in the right direction. Don’t like our algorithms? Bring your own! Twitter has talked about eventually making available to us some sort of “algorithmic app store”; Facebook has brought it a step closer to reality.

That’s the easy part, though. There are so many more tough questions about Facebook. Isn’t it incentivized to show us more polarizing content? Isn’t that having some sort of broader effect on the user base, and on our politics? How does the company justify saying that it is a net positive for the world?

For that, I had to get Clegg on the phone — or, rather, the Zoom. My friend Nilay Patel was gracious enough to let me crash his excellent interview podcast, Decoder, and spend 40 minutes or so asking Clegg about what’s at stake here.

A few highlights of my conversation with Clegg are below, edited for length. But I really encourage you to listen to the whole thing — or at least read the full transcript, which should be posted at that link by Thursday morning.

As always, let me know what you think.

Casey Newton: I suspect if there’s one sentence in your piece that most people will take issue with, it’s when you write, “Facebook’s systems are not designed to reward provocative content.” At the same time, when we look at lists of pages that get the most engagement, it does tend to be pages that seem to be pushing really polarizing content. So how do you reconcile this at Facebook?

Nick Clegg: Well, firstly, I of course accept that we need to just provide more and more data and more evidence about what is the specific content that is popular on News Feed. And then of course, although Facebook’s critics often talk about sensational content dominating News Feed, of course we want to show, as I think we can, that many of the most popular posts on News Feed are lighthearted. They’re feel-good stories. We want to show people that the overwhelming majority of the posts people see on News Feed are about pets, babies, vacations, and similar. Not incendiary topics. In fact, I think on Monday, one of the most popular posts in the US was a mother bear with three or four baby cubs crossing a road. I saw it myself. It’s lovely. I strongly recommend that you look at it. And I think we can, and we will, do more to substantiate that.

But the signals that are used in the ranking process are far more complex, are far more sophisticated, and have far more checks and balances in it than are implied by this cardboard cutout caricature that somehow we’re just spoon-feeding people incendiary, sensational stuff. And I’m happy to go into the details if you like, but thousands of signals are used, literally from the device that you use to the groups that you’re members of and so on. We survey evidence. We’re using more and more survey evidence. We’ll be doing more of that in the future as well to ask people what they find most meaningful. There’s been a big shift in recent years anyway to reward content that is more meaningful, your connections with your families and friends, rather than stuff that is just crudely engaging — pages from politicians and personalities and celebrities and sports pages and so on.

So that shift has already been underway. But in terms of incentives, this is the bit that maybe we have not been articulate enough about. Firstly, the people who pay for our lunch don’t like their content next to incendiary, unpleasant material. And if you needed any further proof of that, this last summer, a number of major advertisers boycotted Facebook because they felt we weren’t doing enough on hate speech. We were getting much better at reducing the prevalence of hate speech. The prevalence of hate speech is now down to, what? 0.07, 0.08 percent of content on Facebook. So every 10,000 pieces of content you see, seven or eight might be bad. I wish it was down to zero. I don’t think we’ll ever get it down to zero. So we have a massive incentive to do that.

But also if you think about it, if you’re building a product which you want to survive for the long term, where you want people in 10 years, in 15 years, in 20 years to still be using these products, there’s really no incentive for the company to give people the kind of sugar rush of artificially polarizing content, which might keep them on board for 10 or 20 minutes extra. Now, we want to solve for 10 or 20 years, not for 10 or 20 extra minutes. And so I don’t think our incentives are pointed in the direction that many people assume.

Casey Newton: So let's turn to another really important subject that you write about in this piece, which is polarization. I think a lot of folks I talk to take for granted the idea that social networks accelerate what researchers call negative affective polarization, which is just basically the degree to which one group dislikes another.

But as you point out in the piece, and I have done some writing on, the research here, while limited, is mixed. But I do think it strongly suggests that polarization at least has many causes, and some of those do predate the existence of social networks.

At the same time, I think all of us have had the experience of getting on Facebook and finding ourselves in a fight over politics or observing one among friends and family. And we read the comments and everyone digs in their heels and it ends when they all unfriend each other or block each other. And so what I want to know is do you feel that those moments just aren't collectively as powerful as they might feel to us, individually? Or is there something else going on that explains Facebook's case that it is not a polarizing force?

Nick Clegg: As you quite rightly said, Casey, this is now such a — it's like almost a given. I just hear people literally just make this throwaway remark: "Well, of course social media is the principal reason for polarization." It's just become this settled sediment layer in the narratives. So believe you me, I'm trying to tread really carefully here because when you interrupt people's narratives … folks don't like it.

And that's why I'm very deliberate in the piece not to cite anything that Facebook itself has generated. Of course, we do research. We commission research. This is all third-party research. And I choose my words very carefully: I say that the results of that research, that independent academic research, is mixed. It really is very mixed.

And in answer to your question, I think the reason perhaps why there is this dissonance now between academic and independent research, which really doesn't suggest that social media is the primary driver of polarization after all, and the assumptions, I think there's a number of reasons. It's partly one of geography. I mean, candidly, a lot of the debate is generated by people using social media amongst the coastal policy and media elites in the U.S.

But let's remember, nine out of ten Facebook users are outside the U.S. And they have a completely different experience. They live in a completely different world. And the Pew Study in 2019, which is really worth looking at for those who are interested, looked at people using social media in a number of countries, not the least a number of countries in the developing world.

There they found overwhelming evidence for them, for millions, perhaps billions of people in those countries, who were not living through this peculiar political time in the U.S. They actually were using social media to experience people of different communities, different countries, different religions, different viewpoints, on a really significant scale.

Stanford research … looked at nine countries over 40 years, and found that in many of those countries, polarization preceded the advent of social media. And in many of the countries, polarization was flat or actually declined even as the use of social media increased. So I think there's an issue of geography. There's an issue of time, where we're kind of, candidly, losing a little bit of sense of a perspective. […]

I don't want to somehow pretend that social media does not play a part in all of this. Of course it does, but I do hope I can make a contribution and say, "Look, we stepped back a bit." We're starting to reduce some quite complex forces that are driving cultural, socioeconomic, and political polarization in our society to just one form of communication.

Casey Newton: That's fair. I'll also tell you my fear, though, which is that the best data about this subject, or a lot of it, is at Facebook. And I think there's a good question about whether Facebook even really has the incentive to dig too deeply into this.

We know that when it has shared data with researchers in the past, it's caused privacy issues. Cambridge Analytica essentially began that way, and yet this feels really, really important to me. So I'm just wondering, what are the internal discussions about research? Does the company feel like it maybe owes us more on that front, and is that something it's prepared to take on?

Nick Clegg: Yes, it does. I personally feel really strongly about this. I mean, look. Of course, Cambridge Analytica, it's often forgotten, was started by an academic legitimately accessing data and then illegitimately flogging that data on to Cambridge Analytica. And so of course that rocked this company right down to its foundations. And so of course, that has led to a slightly rocky path in terms of creating a channel by which Facebook can provide researchers with data.

But I strongly agree with you, Casey. These are issues which are not only important to Facebook. They're societally important. I just don't think we're going to make progress unless we have more data, more research independently vetted so that we can have a kind of mature and evidence-based debate.

Look, I do think we're getting a lot better. The time I've been here, I really believe we're starting to shift the dial. Last year, we provided funding to, I think, over 40 academic institutions around the world looking at disinformation and polarization.

We've helped launch this very significant research project into the use of social media in the run-up to the US elections last year. Hopefully, those researchers will start providing the fruits of their research during, I think, the summer of this year. We've made available to them unprecedented amounts of data. There are always going to be pinch points where we feel that researchers are in effect scraping data, where we have to take action.

Candidly, we are legally and duty bound, not least under our FTC order to do so. I hope we can handle those instances in a kind of grown-up way, whilst at the same time continuing to provide data in the way that you described. I would really hope, Casey, that if you and I were to have this conversation in a year or so, you and I would be able to point to data which emanates from Facebook but has been freely and independently analyzed by academics in a way that has not been the case in recent years.

Casey Newton: What will it mean for Facebook if the Oversight Board restores Donald Trump?

Nick Clegg: Well, technically and narrowly, if they say, "Facebook, thou shalt restore Donald Trump," then that is what we will do, because we have to. We've been very, very clear at the outset. The oversight board is not only independent, but its content-specific adjudications are binding on us. Beyond that, as I said, and look, I know, of course, the decision they make about Trump will grab all, quite rightly, the headlines.

But I actually hope that their wider guidance on what we should do going forward in analogous cases will be as significant, if not more so, because we're trying to grapple with where we should intrude in what are otherwise quintessentially political choices. And we're anxious for their guidance, and we will then cogitate on that guidance ourselves and then provide our own response over a period of time.

But in terms of the specific up or down decision, that is something, assuming that they're going to be clear one way or another, where we will have to abide by their decision. Our rules, in terms of violations, strikes, and all the rest of it, they will remain in place. But our hands quite deliberately and explicitly are tied as far as specific individual content decisions that they make are concerned.

The Ratio

Today in news that could affect public perception of the big tech companies.

⬆️ Trending up: Google canceled April Fool’s Day pranks for the second year in a row. Normalize not pranking people! (Sean Hollister / The Verge)

⬆️ Trending up: YouTube finally removed Steven Crowder from its partner program after years of videos in which he harassed other creators and posted racist rants. You’d think he would be banned by now, but apparently this is not even the first time he has been removed from the partner program. (Sean Hollister / The Verge)

Governing

⭐ Facebook removed a video posted to the page of Lara Trump for featuring the voice of her father-in-law, Donald Trump. Mitchell Clark explains at The Verge:

Donald Trump has been kicked off of Facebook a second time after Media Matters for America raised questions about his appearances on daughter-in-law Lara’s page. Facebook has removed an interview with the former president that was posted by Lara Trump, who is married to Eric Trump. Lara posted screenshots of emails that appear to be from Facebook (complete with an obligatory 1984 reference), saying that a video of the interview was removed because it featured “the voice of Donald Trump,” along with a warning that posting further content would result in account limitations.

The full text of the purported email from Facebook is below:

Hi folks,

We are reaching out to let you know that we removed content from Lara Trump’s Facebook Page that featured President Trump speaking. In line with the block we placed on Donald Trump’s Facebook and Instagram accounts, further content posted in the voice of Donald Trump will be removed and result in additional limitations on the accounts.

Google promised not to order employees to avoid discussing their salaries as part of a new settlement with the National Labor Relations Board. It settled a “complaint filed by the Alphabet Workers Union in February alleging that management at the data center forbid workers from discussing their pay and also suspended a data technician, Shannon Wait, because she wrote a pro-union post on Facebook.” (Josh Eidelson / Bloomberg)

The Arizona bill that would have required Apple to allow third-party app stores on the iPhone appears to be dead. “The legislation disappeared before a scheduled vote last week, and now it’s likely done for the year.” (Nick Statt / The Verge)

Four Republican U.S. lawmakers requested that Facebook, Twitter, and Google turn over any studies they have done on how their services affect children’s mental health. The move comes amid news that Facebook is building a version of Instagram for children. (Paresh Dave / Reuters)

The Korea Fair Trade Commission will fine Apple for allegedly interfering in an antitrust investigation. “A strange report today claims that an Apple executive attempted to ‘physically’ deter a Korean antitrust probe by an investor.” (Ben Lovejoy / 9to5Mac)

Industry

⭐ Discord’s new Clubhouse-like feature, Stage Channels, is now available. And just as Clubhouse was wrapping its brain around the new threat posed by LinkedIn! Here’s Jay Peters at The Verge:

If you’ve used Discord before, you might know that the app already offers voice channels, which typically allow everyone in them to talk freely. A Stage Channel, on the other hand, is designed to only let certain people talk at once to a group of listeners, which could make them useful for more structured events like community town halls or AMAs. However, only Community servers, which have some more powerful community management tools than a server you might share with a few of your buddies, can make the new Stage Channels.

The feature’s broad availability makes Discord the first app to offer an easy way to host or listen in on social audio rooms on most platforms. Clubhouse is still only available on iOS, though an Android version is in development.

Some Google workers will begin returning to their US offices in April. The announcement comes after similar moves from Facebook and Microsoft. (Ina Fried / Axios)

Trust in the technology industry cratered over the past year, according to a new survey. “Social media companies, which weren't included as a category in past years, achieved a trust score of 46, putting them below all other categories of businesses in the rankings.” (Ina Fried and Mike Allen / Axios)

A winning profile of Dapper Labs, the company behind NFT trading card phenomenon Top Shot. The company raised $305 million this week, bringing its cumulative total to $357 million. (Elizabeth Lopatto / The Verge)

Periscope shut down today after six years of live streaming video. RIP, little streamer. You were a lot of fun. (Jacob Kastrenakes / The Verge)

Those good tweets

what’s the word for when you’re very smart but can’t remember anything and know literally nothing

— rachelle toarmino (@rchlltrmn) 7:32 PM ∙ Mar 28, 2021

Just to confirm... everyone feels tired ALL the time no matter how much sleep they get or caffeine they consume, but also has trouble falling asleep / is constantly hungry but also nauseous with acid reflux / spends every second working or cleaning yet nothing gets accomplished?

— Kenyon Laing (@KenyonLaing) 2:11 AM ∙ Feb 9, 2021

— Tempa (@QuickestTempa) 7:25 AM ∙ Mar 26, 2021

this is fine, I love online dating

— mollz (@mollydeez) 3:04 AM ∙ Mar 18, 2021

Talk to me

Send me tips, comments, questions, and long Medium posts: casey@platformer.news.