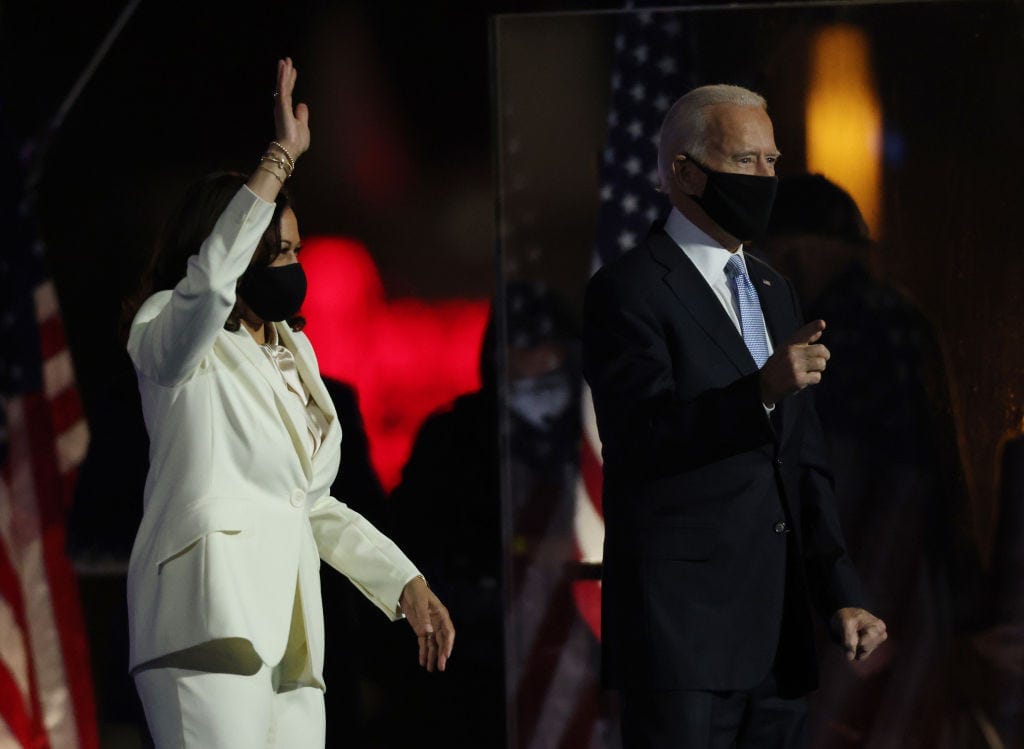

Why the 2020 election won't end

Republicans won't accept the outcome. What's a tech platform to do?

One assumption that many of us had about the 2020 US presidential election is that at some point it would end. This may have been overly optimistic; after all, in many ways — and certainly in this column — the 2016 US presidential election never really ended. We spent four (productive!) years discussing the degree to which foreign interference, particularly on social platforms, had affected the outcome. I imagine we’ll continue discussing it for some time to come.

But if the past election was litigated ad infinitum in columns, this one will be litigated first in courts. President Trump’s legal team has rolled out various challenges in the battleground states in which he is behind, and Republicans are backing those challenges with growing enthusiasm.

Senate Majority Leader Mitch McConnell declined to recognize Joe Biden’s victory and said Trump is “100 percent” entitled to challenge it. A coalition of 10 Republican attorneys general filed an amicus brief on Monday urging the Supreme Court to intervene in a case that could limit the counting of mail-in ballots in Pennsylvania. In Georgia, both Republican senators called upon the state’s top elections official to resign over unspecified “failures.” (Georgia’s secretary of state is a Republican; Biden appears to have won the state.)

Some observers have regarded these disputes so far as a joke, or a joke that is also a grift. Denying the results of the election allows Trump to continue fundraising, and there are many reasons he would want to fundraise as aggressively as possible. (The fine print of an email to supporters noted that 60 percent of proceeds would go to paying down campaign debt.) The president himself is said to have discussed the possibility of a 2024 run for office, suggesting that he might have accepted temporary defeat, at least.

But there are new signs of something much more worrisome taking place, and they have significant implications for the democracy — and for the tech companies that will be called upon to referee what comes next. Writing in Vox, Ezra Klein makes a persuasive case for what will transpire over the coming weeks, months, and years:

That this coup probably will not work — that it is being carried out farcically, erratically, ineffectively — does not mean it is not happening, or that it will not have consequences. Millions will believe Trump, will see the election as stolen. The Trump family’s Twitter feeds, and those of associated outlets and allies, are filled with allegations of fraud and lies about the process (reporter Isaac Saul has been doing yeoman’s work tracking these arguments, and his thread is worth reading). It’s the construction of a confusing, but immersive, alternative reality in which the election has been stolen from Trump and weak-kneed Republicans are letting the thieves escape.

This is, to borrow Hungarian sociologist Bálint Magyar’s framework, “an autocratic attempt.” That’s the stage in the transition toward autocracy in which the would-be autocrat is trying to sever his power from electoral check. If he’s successful, autocratic breakthrough follows, and then autocratic consolidation occurs. In this case, the would-be autocrat stands little chance of being successful. But he will not entirely fail, either. What Trump is trying to form is something akin to an autocracy-in-exile, an alternative America in which he is the rightful leader, and he — and the public he claims to represent — has been robbed of power by corrupt elites.

This “autocracy-in-exile” seems likely to manifest itself as a media operation — perhaps by licensing Trump’s name to the One America News Network, as Dan Sinker speculates; perhaps by signing a deal with Fox News. This is where the second phase of the forever war over the 2020 election will begin, assuming it dies in the courts, and where I think the platforms will struggle as they evaluate their options.

Trump’s promise not to accept a losing result in advance of the election gave companies ample time to make policy enforcement plans, which they largely upheld. Trump’s baseless posts about voter fraud showed up right on schedule; Twitter hid them behind warnings, and Facebook and YouTube slapped them with labels announcing that Joe Biden had been projected as the election’s winner.

The assumption has been that these labels would only be temporary. That at some point, Biden will be seated in office, and claims that the 2020 election was stolen can be safely dismissed as crackpot shitposting.

It is time to assume the opposite. That these false claims will endure for the next four years and beyond. That accepting them as true will become a loyalty test for any Republican seeking office. And that they will be repeated by top Republican elected officials, broadcast daily in right-wing media, and generate millions of interactions in frothing-at-the-mouth posts across every social network and video platform.

We have already seen a preview of this phenomenon in the Facebook group “Stop the Steal,” which amassed an incredible 360,000 members in 48 hours. To its credit, Facebook removed the group under its policy against organizations that undermine civic integrity. But what happened next underscored the magnitude of the whack-a-troll challenge that Facebook and other platforms now face. Keith Wagstaff had the story at Mashable:

On Friday morning, two more “Stop the Steal” Groups — one with more than 84,000 members, the other with more than 46,000 — took its place. They occupied the top two spots on a list of Groups with the most interactions on Facebook on Friday, according to CrowdTangle, an analytics tool owned by Facebook. Both were created on Nov. 5.

And late Monday afternoon, Facebook removed a network of pages linked to former Trump chief strategist Steve Bannon that used “stop the steal” messaging in posts to 2.45 million followers.

Last month, when Facebook belatedly banned QAnon groups, I wrote about the challenge platforms have in deciding when to act in cases like these. There was a moment when QAnon was just a series of absurd posts on a third-rate web forum, and a moment when it had become a violent social movement, and no perfect time in between to declare all discussion of it forbidden on Facebook. But in the meantime, QAnon grew dramatically there and on other platforms, and it now looks to become an enduring undercurrent in our politics.

With the arrival of 2020 election denialism, the same systems will be tested. Only this time, instead of rising up slowly from the internet’s murkiest corners, the false claims will be coming down rapidly from the Senate majority leader, the House minority leader, the Department of Justice, and countless other mainstream political figures. The threats to civic integrity will arguably be even greater in coming weeks than they were in the run-up to the election.

In the meantime, a survey this week found that 7 in 10 Republican voters say the 2020 election was not free or fair. Trump supporters are already marching in the streets, citing debunked videos that were shared widely on social networks. Two men were arrested with guns (and a truck bearing QAnon stickers) outside a Philadelphia voting center. The prospect of violence grows more likely with every Republican official who encourages voters to reject the outcome.

In the run-up to the 2020 election, platforms made admirable commitments to the democratic process — registering millions of people to vote, reminding them to get to the polls, and offering accurate, real-time information about election results as they came in. Over the weekend, it became clear that as necessary as that was, it will not be sufficient to protect democracy in the weeks to come.

Whenever possible, platform policies tend to default to centrism. But as Republicans attempt to overturn voters’ will — before January 20 and after — centrism will not suffice. I’m grateful for how far platforms have come on this subject to date — and more than a little worried about how much farther they may yet need to go.

The Ratio

Today in news that could change public perception of the big tech companies

⬆️ Trending up: Facebook will require moderators of groups that repeatedly share misinformation to manually approve each post as part of an effort to slow the spread of conspiracy theories. (Heather Kelly / Washington Post)

⬆️ Trending up: Twitter has applied warning labels to President Trump’s tweets almost continuously since he began posting disinformation about the election outcome. In a high-profile case, the company has enforced its policy effectively and quickly. (Russell Brandom and Adi Robertson / The Verge)

⬇️ Trending down: YouTube declined to remove videos that boosted a conspiracy theory that Arizona ballots were being wrongfully invalidated. One video had more than 400,000 views; YouTube demonetized it. (Drew Harwell / Washington Post)

Governing

⭐ Thousands of Facebook groups buzzed with calls for violence ahead of the US election. Increased chatter led the company to roll out measures designed to slow the spread of inflammatory posts. Katie Paul has the story at Reuters:

A survey of U.S.-based Facebook Groups between September and October conducted by digital intelligence firm CounterAction at the request of Reuters found rhetoric with violent overtones in thousands of politically oriented public groups with millions of members.

Variations of twenty phrases that could be associated with calls for violence, such as “lock and load” and “we need a civil war,” appeared along with references to election outcomes in about 41,000 instances in U.S.-based public Facebook Groups over the two month period.

Facebook removed networks of inauthentic accounts spanning eight nations. In October the company removed some 8,000 pages connected to networks in Iran, Afghanistan, Egypt, Turkey, Morocco, Myanmar, Georgia, and Ukraine. (Reuters)

The Iranian regime is investing more resources in policing Instagram, forcing users to delete photos in which women are not wearing hijabs and pledge fealty to the Islamic Republic in their bios. The app has more than 24 million users in Iran, including some political users, but attention to it has increased as usage soared during the pandemic. (Mehr Nadeem / Rest of World)

Pinterest, LinkedIn and NextDoor are among the smaller social networks that saw a surge in misinformation during the election. Sites with smaller moderation teams often take brute-force measures in response, blocking all search results for problematic hashtags. (Cat Zakrzewski and Rachel Lerman / Washington Post)

Two men were arrested over the weekend near a Philadelphia voting center with QAnon stickers on their truck and an AR-15-style rifle inside it. The men’s social media posts expressed enthusiasm for QAnon and for the “Stop the Steal” campaign on Facebook. (Tom Winter, Ben Collins, Daniel Arkin and Brandy Zadrozny / NBC)

Zoom settled with the Federal Trade Commission after making deceptive claims about its security features. The company agreed to implement stronger security measures, and recently rolled out end-to-end encryption. (Zack Whittaker / TechCrunch)

President Trump fired the secretary of defense in a tweet. Not great. (Missy Ryan and Dan Lamothe / Washington Post)

Steve Bannon’s show was suspended from Twitter, and an episode of it was removed by YouTube, after he called for violence against FBI director Christopher Wray and government pandemic expert Anthony Fauci. (Devin Coldewey / TechCrunch)

Here are just some funny TikToks about the slow ballot count in Nevada. (Lauren Strapagiel / BuzzFeed)

Industry

Tech executives are counting on Joe Biden to return a sense of normalcy to the presidency — but his arm’s-length approach to the industry makes his next moves somewhat hard to predict. Among things the industry is counting on: relaxed immigration restrictions; championing net neutrality; and Section 230 reform. Less certain: his approach to antitrust. (Issie Lapowsky / Protocol)

Apple will require new “nutrition labels” for privacy beginning December 8. I’ll mostly be watching to see the extent to which Apple uses these to embarrass rivals. (Chance Miller / 9to5Mac)

Spotify appears to be building toward a subscription podcast business. The company is surveying users about their willingness to pay $3 to $8 more per month for premium podcast features. (Jacob Kastrenakes / The Verge)

Chinese apps are increasingly building in social features to promote commerce. Ahead of the massive Singles Day shopping holiday, apps are encouraging users to share virtual shops with friends in exchange for rewards. (Xinmei Shen / South China Morning Post)

Those good tweets about Four Seasons Total Landscaping

(Context.)

Philly's hottest club is Four Seasons Total Landscaping. They've got everything: a crematorium, a dildo store, Rudy Giuliani

— Drivont (@Drivont) 3:05 PM ∙ Nov 8, 2020

Yes, I went to Harvard, Harvard Total Landscaping.

— Alexia Bonatsos (@alexia) 3:45 PM ∙ Nov 8, 2020

Ha ha ha OMG! The grand debut of Four Seasons Total Landscaping was AMAZING! Thank you to everyone who showed up!

— coopertom (@thecoopertom) 8:04 AM ∙ Nov 9, 2020

For your Zoom call this week.

— Jill Blake (@biscuitkitten) 6:45 AM ∙ Nov 9, 2020

From a local landscaping company. 😁

— Catherine Lundoff (@clundoff) 8:03 PM ∙ Nov 9, 2020

I work at Four Seasons Total Landscaping in PA

— Christine Nangle (@nanglish) 1:05 AM ∙ Nov 8, 2020

Unfortunately, I accidentally bought stock in Pfizer Total Landscaping

— wolf (@hungry_cap) 2:04 PM ∙ Nov 9, 2020

Talk to me

Send me tips, comments, questions, and landscaping tips: casey@platformer.news.