YouTube's Neal Mohan on the next phase of the misinformation fight

PLUS: I'm co-hosting a new podcast for the New York Times (!)

Programming note: Platformer is off Monday for Presidents’ Day.

Today, let’s talk about YouTube’s latest thinking on what to do about misinformation — and what other platforms might learn from the open-minded approach it’s taking.

On Thursday the company’s chief product officer, Neal Mohan, published an unusually compelling post about the company’s efforts to fight misinformation. I say “unusual” because it suggested a series of possibilities, rather than definitive actions. Usually when a big platform decides to talk about efforts to fight harmful content, it lays out a series of immediate next steps and says little about the future. Mohan, on the other hand, speculated about some things that YouTube might do, inviting a dialogue on the best way for big platforms to handle these issues.

I really like this approach. More than five years after our reckoning over big tech began, platforms are beginning to get more comfortable admitting that they don’t always know what to do. That in many cases they have no good choices, and any action they take to make the platform safer could have negative consequences for a variety of users. At the same time, companies have learned a lot about how their platforms are misused over the past half-decade, and they’re discussing potential solutions with increased sophistication.

Meanwhile, misinformation itself is changing, Mohan told me.

“The way the landscape is shifting is moving from very broad, stable misinformation narratives — 9/11 truthers, flat earthers, man on the moon stuff — to much more niche, much more quickly evolving narratives,” he said. “There were several around the election that were quintessential in that regard. … Multiply that by a couple hundred countries and it turns into quite a complicated picture.”

On Wednesday, Mohan and I hopped on Google Meet to talk about the company’s latest thinking. Here’s what I learned.

The company wants to catch more misinformation before it goes viral. To do so, it’s putting a lot of pressure on its machine-learning systems.

Talking to Mohan, I kept thinking of “Plandemic,” a video of false conspiracy theories that reached 8 million views in May 2020 before YouTube removed it from the platform. The obvious question in the aftermath of that incident was how it managed to attract such a big audience before YouTube noticed. And the answer is that, by YouTube’s standards, 8 million views isn’t all that much. (The same day it was pulled, a three-day-old music video for a song called “Gooba” hit 103 million views.)

That’s why YouTube wants to lean more on machine learning. Mohan and I didn’t get too far into the details, but the basic idea is to use the platform’s existing knowledge of misinformation to get better at identifying new niche narratives that may be problematic.

“It’s about taking some of those early-warning type signals, even before they become videos on our platforms in some cases,” Mohan said. “And using that to train classifiers that can be focused on individual, niche narratives.”

This is still arguably less of a hands-on approach than something like TikTok, which applies stricter, more human moderation to which videos are eligible for its central For You feed. But every platform could stand to develop good early-warning systems for influxes of false narratives, and I hope YouTube will share more about what it learns here.

YouTube wants to do something about the conspiracy videos that go viral because they’re shared on Facebook and other big platforms.

Returning to “Plandemic” again — it didn’t get 8 million views because YouTube promoted it. Rather, it got 8 million views because it was repeatedly shared in Facebook groups, many of them devoted to QAnon.

This revealed the extent to which YouTube’s choice to serve as a library for videos that are too bad to recommend, but not bad enough to remove, can make it an unwitting accomplice in the growth of QAnon and other conspiracy movements. And so the company is now thinking through what it can do about that cross-platform sharing.

Mohan floated what struck me as some fairly daring ideas here, including disabling sharing links or embeds for videos that YouTube decides to stop recommending. As he notes in his post, there are some clear downsides here — among other things, it could make it harder for researchers to organize collections of videos for academic or journalistic purposes, or simply to refute them in a blog post or video.

A less disruptive solution could be to add an interstitial pop-up that informs viewers that what they’re about to watch may contain misinformation. At the moment, YouTube hasn’t figured out what those interstitials might say. And Mohan worries that, while less disruptive than breaking links, interstitials may also be ineffective.

“That’s decent, but is it sufficient? Does it actually change anything?” he said. (The data on these sorts of content warnings across the industry appears to be murky at best, though as far as I can tell it hasn’t been closely studied.)

On the whole, I think I prefer interstitials to broken links. Particularly if they’re specific — “Facebook QAnon groups have driven significant traffic to this video, which may contain misinformation” is very good context to have before watching something! The question is whether YouTube can get that information in real time, or feel comfortable sharing it — in all 100 countries where it operates.

Speaking of which …

YouTube says it’s going to scale up its fight against misinformation in countries outside the United States.

One of the most disconcerting revelations in last year’s Facebook Papers was the degree to which Americans get a high standard of content moderation, while many other countries are forced to make do with a lower one. Platforms often choose to operate in countries where they have little expertise in local politics, and lack moderators who speak all of the languages spoken by their users.

Mohan says YouTube is “exploring” new partnerships with local experts and non-governmental organizations in countries around the world. It’s also updating its machine-learning models to look for misinformation in local languages.

“You should expect us to be robust and global — not just in terms of our policies, but also all of these product-based solutions around raising up authoritative content and reducing borderline content,” Mohan told me.

This one doesn’t mean much without further action on YouTube’s part. But now that the company has committed to it, it can be held accountable for it. I’ll take it.

All of this applies to Shorts, too.

YouTube is clearly thrilled with the early progress it’s seeing from its TikTok competitor, Shorts. And one question readers have raised for me is whether the company’s various misinformation policies and enforcement techniques will apply to short-form videos as well.

Mohan tells me they do.

“The good news is that I think a lot of the foundational elements, both in terms of principles as well as in terms of technology, will apply in almost exactly the same way around Shorts as they do with [traditional videos],” he said.

Of course, it would be great if YouTube and other platforms had already developed sophisticated solutions to catch every hoax as it goes viral, reduce its spread across platforms, and scale those technologies around the world. But there’s also value in platforms acknowledging the current state of affairs, soliciting feedback, and committing to sharing what they find.

The important thing, Mohan said, is to acknowledge that determining what’s true is an imperfect science, and to set policies accordingly.

“A lot of these narratives have evolved to have elements of false and truth in them. It's a much more murky space,” he said. “And so the constant struggle, or trade-off, is this balance — to use a sort of overused term, this freedom of speech versus freedom of reach.”

Announcements

From the day I started Platformer, I’ve been looking for a podcast project to complement the daily newsletter and extend it into audio. Specifically, I’ve wanted to do a show with a great co-host, about important but under-covered terrain, that intersects with my interest in tech platforms and governance. A show that’s smart and journalistic, skeptical but open-minded, and also fun: something you genuinely look forward to listening to.

Today I’m thrilled to tell you that such a project is now in the works: I’ll be co-hosting a weekly chat show for the New York Times with my friend Kevin Roose. And while I can’t tell you everything about it today, I can tell you that we’re going to explore some of the subjects that you’ve been most interested in on Platformer in recent months: the biggest stories in NFTs, DAOs, web3, the metaverse, and other strange (and non-crypto) parts of our weird new economy. You know how, at least once a week, there is some new economic or technological phenomenon that makes us all collectively say: what??

The show is about that.

To make a great show, we need a great producer. Kevin and I are going to make the show in person here in the Bay Area, and we’d love to work with someone local who can sit in the room with us and make the best possible pod. The job description just went live — please share it far and wide.

And if you don’t know a good producer, maybe suggest some great guests.

I remain totally committed to Platformer, and nothing about the newsletter will change as we develop the show. I will likely be looking for help putting together Platformer in coming weeks, though, so stay tuned for another job description here soon.

Governing

⭐ Donald Trump’s Truth Social app has entered private beta. Some 500 lucky souls have been able to test the Twitter clone to date. Here’s Helen Coster at Reuters:

Truth Social allows users to post and share a “truth” the same way they would do so with a tweet. There are no ads, according to Willis and a second source familiar with TMTG.

Users choose who they follow and the feed is a mix of individual posts and an RSS-like news feed. They will be alerted if someone mentions or begins following them.

The United Kingdom Home Office is pushing for new regulations that would require platforms to proactively monitor for threats and hoaxes like bomb scares. Critics say the rules represent overreach, and could conflict with data privacy laws. (Tim Bradshaw, Jasmine Cameron-Chileshe and Peter Foster / Financial Times)

A bipartisan pair of senators introduced a new online safety bill aimed at protecting children. Lots in here, but this made me blink a few times: “Companies would also have to offer parents and minors the ability to modify tech companies’ recommendation algorithms, allowing them to limit or ban certain types of content.” What? (Cat Zakrzewski / Washington Post)

Nick Clegg has been appointed Meta’s president of global affairs. The move is intended to give Mark Zuckerberg more time to focus on product, and Sheryl Sandberg on business. (Kurt Wagner / Bloomberg)

Clearview AI is telling investors it plans to expand far beyond law enforcement uses. The company has slurped up 10 billion images, and says future businesses could include “identity verification or secure-building access.” (Drew Harwell / Washington Post)

Criminals now own $11 billion worth of cryptocurrency stemming from known illicit sources, up from $3 billion at the end of 2020. Crime pays, though as Razzlekhan learned the hard way, it can be very difficult to spend. (Chainalysis)

Cryptocurrency trading is booming after India began taxing it at 30 percent, signaling that the government considers it legitimate. “Binance-owned WazirX, India’s largest crypto bourse, has seen daily sign-ups on its platform jump almost 30 percent since Feb. 1.” (Ruchi Bhatia and Suvashree Ghosh / Bloomberg)

A coalition of big crypto companies announced a new platform for securely sharing customer information in order to comply with anti-money laundering laws. BlockFi, Circle, and Coinbase are among the participants. (Aislinn Keely / The Block)

A look at the security risks of Otter.ai, beloved by journalists for its automated interview transcriptions. While convenient to use, the transcripts are available for seizure by law enforcement in ways that are not always transparent to users. (Phelim Kine / Politico)

Industry

⭐ Google announced its answer to Apple's disruptive App Tracking Transparency feature, but said it would phase out current methods over two years. Advertisers hope that will give them the time necessary to adapt. Here’s Daisuke Wakabayashi at the New York Times:

Anthony Chavez, a vice president at Google’s Android division, said in an interview before the announcement that it was too early to gauge the potential impact from Google’s changes, which are meant to limit the sharing of data across apps and with third parties. But he emphasized that the company’s goal was to find a more private option for users while also allowing developers to continue to make advertising revenue. […]

Mr. Chavez said that if Google and Apple did not offer a privacy-minded alternative, advertisers might turn to more surreptitious options that could lead to fewer protections for users. He also argued that Apple’s “blunt approach” was proving “ineffective,” citing a study that said the changes in iOS had not had a meaningful impact in stopping third-party tracking.

Related: Here’s an in-depth, lightly positive analysis of the move from Eric Seufert.

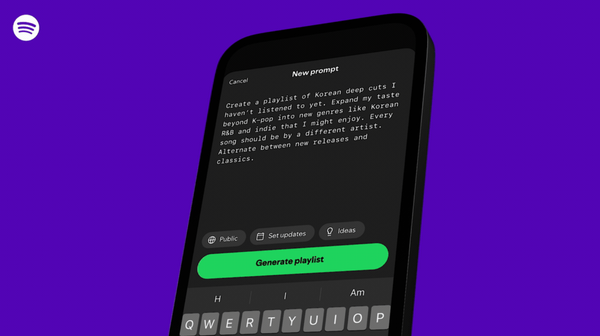

Spotify’s Joe Rogan deal was worth at least $200 million, double what was previously reported. Puts the $100 million that the company promised to underrepresented creators last week into a much less flattering perspective. (Katherine Rosman, Ben Sisario, Mike Isaac and Adam Satariano / New York Times)

Spotify bought two companies that specialize in podcast marketing and ad tech attribution. Chartable and Podsights could help the company build a dominant ad-tech stack in audio. (Ashley Carman / The Verge)

Microsoft removed social hubs from its AltspaceVR platform as part of a set of efforts designed to improve safety and moderation. You’ll soon need a Microsoft account to log in, too. (Adi Robertson / The Verge)

Twitter added tipping in Ethereum. Please add an extra $100 to your intended tip to cover the gas fees. (Zack Seward / CoinDesk)

Twitter bot accounts can now optionally label themselves as such. I love this — such a simple way to build trust in the platform. (Kris Holt / Engadget)

Twitter is expanding the rollout of its Safety Mode feature to include about 50 percent of users. It offers additional account protections to users who find themselves to be Twitter’s main character of the day. (Jay Peters / The Verge)

Twitter CEO Parag Agrawal will take “a few weeks” of paternity leave. Love this. (Will Oremus / Washington Post)

A look at the decline of Google search for product reviews as its results page has become an SEO wasteland. As a result, smart searchers are adding “site:reddit.com” to their searches to hear from actual human beings. (dkb.io)

Google will finally stop defaulting your view to a physical sheet of paper in Docs. Allegedly. Still not enabled on my accounts. I’ll believe it when I see it! (Abner Li / 9to5Google)

Those good tweets

One reason I still have trouble believing crypto currency is money is that there aren’t commercials for money.

— Noah Garfinkel (@NoahGarfinkel) 2:07 AM ∙ Feb 14, 2022

SURELY I've accepted all possible cookies by now.

— Akilah Green (@akilahgreen) 8:01 PM ∙ Feb 14, 2022

The most embarrassing thing about Wordle is that when I don't get it in three I am convinced I am about to learn a brand new word and then it's like...THOSE

— Christina Grace (@C_GraceT) 8:06 PM ∙ Feb 14, 2022

hi twitter I just learned that the UK edition of dollar tree is this and I may never recover

— Margaret McDeadlines Owen (@what_eats_owls) 3:25 AM ∙ Feb 16, 2022

Talk to me

Send me tips, comments, questions, and YouTube misinformation: casey@platformer.news.