How platforms are handling the slop backlash

AI-generated media is generating millions of views. But some companies are beginning to rein it in

This is a column about AI. My boyfriend works at Anthropic. See my full ethics disclosure here.

Today, let's talk about how platforms are responding to the rise of slop.

To some AI insiders, the novelty of OpenAI's Sora video generation tool has begun to wear off. The videos feel same-y; the creative tools are limited; and also there's that nagging feeling that looking at Sora too long cooks your brain.

At the same time, two weeks after its release, Sora remains the No. 1 free app in the US App Store. And OpenAI is continuing to iterate on it, announcing on Wednesday that the app can now be used to generate videos of up to 25 seconds.

In Sora we find the entire debate over AI-generated media in miniature. On one hand, the content now widely derided as "slop" continually receives brickbats on social media, in blog posts and in YouTube comments. And on the other, some AI-generated material is generating millions of views — presumably not all from people who are hate-watching it.

The popularity of surreal AI genres such as "slicing through glass fruit," Italian brainrot, and shrimp Jesus helps to explain much of the disdain for them. If slop weren't occasionally compelling, we might feel like we could safely ignore it. But to a relatively large audience it is, as the 3 million views on this AI clip of a knife slicing through glass pancakes and waffles attest.

And so if you're the sort of person who prefers your internet man-made — or at least in healthy balance with AI models — the rise of slop understandably feels like a threat. Increasingly, a coalition of average people and copyright holders are pushing the platforms themselves to take action — and they're beginning to see some success.

One place where this has been true is Pinterest. The mood board site, which has been besieged by AI-generated imagery over the past year, on Thursday introduced a feature to allow users to limit (but not entirely block) AI-generated images in their recommendations. The change followed a move earlier this year to begin labeling AI-generated images as such.

As Sarah Perez noted at TechCrunch, Pinterest has come under fire from its user base all year for a perceived decline in the quality of the service as the share of slop there increases. Many people use the service to find real objects they can buy and use; the more that those objects are replaced with AI fantasies, the worse Pinterest becomes for them.

Like most platforms, Pinterest sees little value in banning slop altogether. After all, some people enjoy looking at fantastical AI creations. At the same time, its success depends in some part on creators believing that there is value in populating the site with authentic photos and videos. The more that Pinterest's various surfaces are dominated by slop, the less motivated traditional creators may be to post there.

Spotify faces a similar challenge. The advent of AI music tools like Suno and Udio now lets anyone create a relatively cohesive song within seconds, often with voices that sound very similar to superstars. The result is that artists now have a new threat to monitor, lest someone clone three popular voices and create a song that briefly charts on Spotify — as was the case with this fake collaboration between Bad Bunny, Justin Bieber and Daddy Yankee.

In Rest of World, Laura Rodríguez Salamanca surveyed the music industry in Latin America and found that music slop is increasingly crowding out the real thing. One study she cites predicts that AI songs will account for 20 percent of streaming platform revenue by 2028.

"The music isn’t great, but it still sucks away limited streaming income from real artists," she writes. "The speed and volume of new AI music is now exhausting human artists and distracting listeners, said people in the Latin music industry."

Some artists are using AI in creative ways. In particular, tools like Suno seem to have inspired a new generation of parody songwriters, who have repeatedly gone viral on TikTok this year with profane songs designed to shock parents. (I haven't been able to get "Country Girls Make Do" out of my head all year.)

But the majority of AI-generated uploads to streaming services are likely spam. And under pressure from record labels to take action, Spotify said last month that it had removed 75 million spam tracks from the service over the past year. The company introduced stronger rules against impersonation, a new music spam filter, and a way for responsible artists to disclose their use of AI on a voluntary basis.

For Spotify, as for Pinterest, the deluge of AI content also represents an opportunity. Even as it fights to stem the tide of slop, today Spotify announced a partnership with the major record labels to develop "responsible" AI products. While the company offered few details, it said it is establishing a "generative AI research lab and product team," according to Steven J. Horowitz in Variety. It also seems to be planning a way for artists to opt in to letting fans use generative music tools with their licensed music.

Any platform that lets people upload media will find itself swimming in slop whether it wants to be or not. But for some companies, the slop is self-inflicted.

Last year Reddit introduced Reddit Answers, a ChatGPT-like chatbot with access to the platform's posts. It is now in beta in Reddit forums, and moderators cannot disable it.

That became an issue this week when a user in the r/FamilyMedicine subreddit noticed that Reddit Answers had suggested that people interested in pain management could try heroin. As Emanuel Maiberg writes at 404 Media, Reddit Answers also recommended that users try kratom, which is illegal in some states.

The company said today that it would no longer display Reddit Answers under "sensitive topics."

All of these platforms are generally designed to get you to spend more time there. The promise of synthetic media is that platforms will soon have a cheap, abundant resource that makes their products more compelling than ever before. For the moment, though, that abundant resource is also eroding their customers' trust at a rapid clip. At platform scale, a big surge of slop really can make everything worse, and tech companies are now beginning to take that threat seriously.

On the podcast this week: Kevin and I sort through California's sweeping effort to regulate social media and AI. Then, Encode general counsel Nathan Calvin joins us to discuss OpenAI's recent lawfare against its critics. And finally, we present the Hard Fork Review of Slop.

Apple | Spotify | Stitcher | Amazon | Google | YouTube

Sponsored

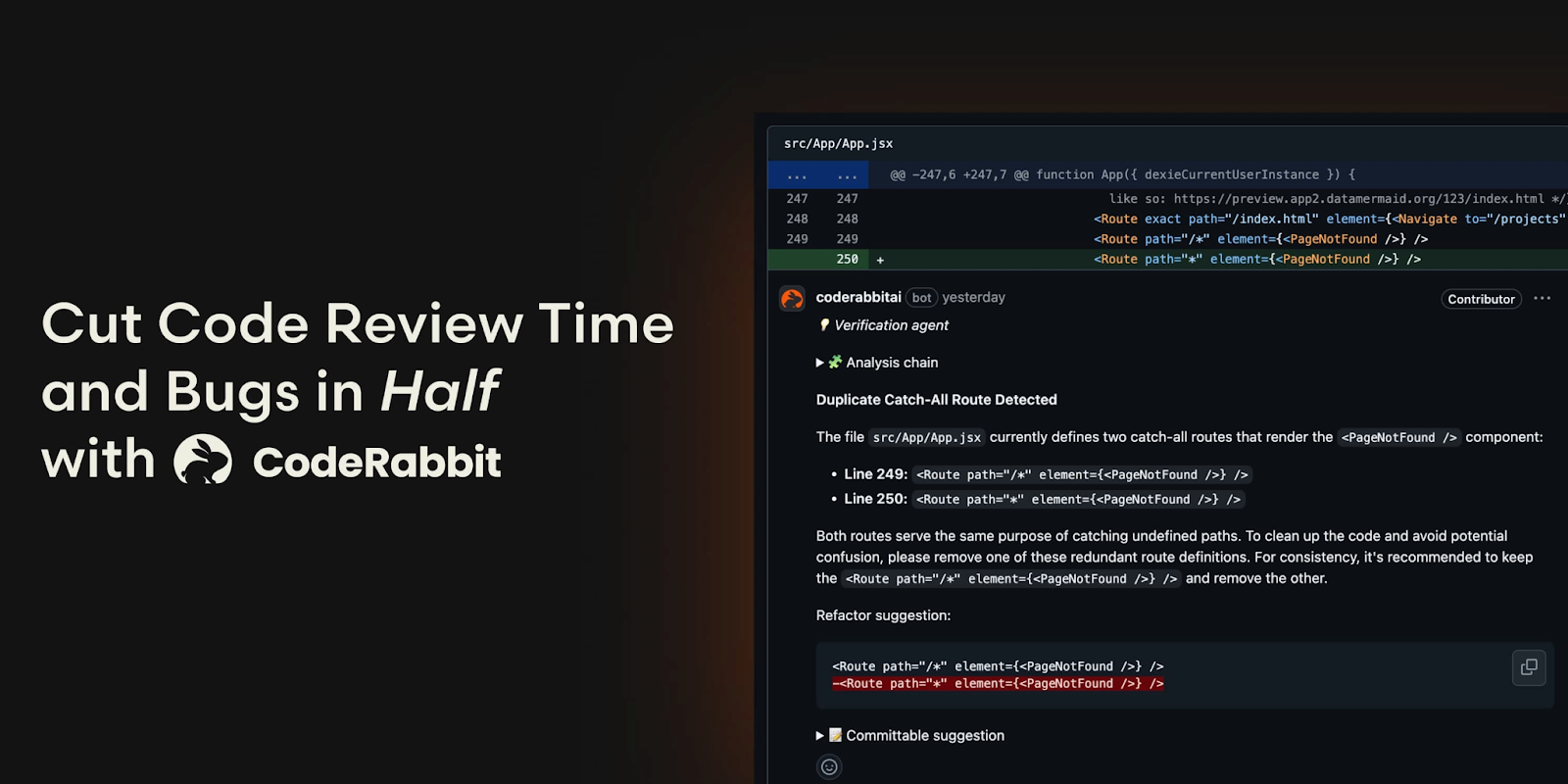

Cut Code Review Time & Bugs in Half

Code reviews are critical but time-consuming. CodeRabbit acts as your AI co-pilot, providing instant code review comments and an analysis of the potential impact of every pull request.

Beyond just flagging issues, CodeRabbit provides one-click fix suggestions and lets you define custom code quality rules using AST Grep patterns, catching subtle issues that traditional static analysis tools might miss.

CodeRabbit has reviewed more than 10 million PRs so far, is installed on 1 million repositories, and is used by 70,000 open-source projects. CodeRabbit is free for all open-source repos.

Following

The bubble discourse continues

What happened: Discussion about the swelling economic bubble in AI continues to pop ... off. This week the Financial Times reported on how a collection of ten money-losing startups — including OpenAI, Anthropic, and xAI — have gained roughly $1 trillion in valuation this year. AI investments account for about two-thirds of American venture capital investment this year, totaling $161 billion. VCs are saying that startups are seeking much higher valuations than their numbers would have warranted in prior tech booms, although they also said that during all previous tech booms.

For its part, OpenAI has drawn up a five-year business plan for how it will raise the trillion-plus dollars it has already pledged to spend on AI technology. The plan includes selling bespoke products to governments and businesses, building new shopping tools, selling online advertising, and making consumer hardware. The company is also considering “creative” plans to raise new debt, another hallmark of a bubble.

Meanwhile, Meta continues to make big investments in AI infrastructure. The company announced it plans to spend $1.5 billion on a 1GW data center in Texas; overall, it will spend as much as $72 billion on infrastructure this year, much of which will go to AI. (That's still far less than OpenAI plans to spend, assuming you believe them.)

As for Anthropic, this week the company was reported to be holding funding talks with UAE investment firm MGX during CEO Dario Amodei’s tour of the Middle East. (The July controversy over the company's pursuit of Gulf investment, which resulted in the first major leak to the press out of Anthropic, seems to have died down.) Anthropic is entering those talks with considerable momentum: Reuters reports that the company is on track to hit $9 billion in annualized revenue this year and projects as much as $26 billion in annualized revenue next year. (It was at $1 billion in ARR in January.)

Why we’re following: AI is swallowing the economy — at least, the part that's growing quickly. Even as many investors and companies remain bullish, huge investments and odd financing schemes are raising some eyebrows. At the same time, revenue growth like Anthropic is now seeing will continue to put dollar signs in investors' eyes.

What people are saying: “Of course there’s a bubble,” Hemant Taneja, chief executive of venture capital firm General Catalyst, told the Financial Times. “Bubbles are good. Bubbles align capital and talent in a new trend, and that creates some carnage but it also creates enduring, new businesses that change the world.”

Discussing OpenAI’s business model, a senior OpenAI executive told the Financial Times that “right now I’d say there’s lots of fuzz on the horizon.” But they’re optimistic: “as it gets closer… it’s going to start to take real shape.”

Meanwhile, a viral X post by user account_blown announced “BREAKING: OpenAI to partner with OpenAI to help fund OpenAI. OpenAI up 90%.”

In a popular YouTube short, tech influencer CarterPCs expressed skepticism about Sora and OpenAI's ability to turn a profit: “They’re getting these hundreds of billions of dollars in investments and charging 9.99 for subscriptions. Something’s not gonna work there.”

YouTuber Hank Green released a video called “The State of the AI Industry is Freaking Me Out.” In particular, he expressed concern about circular investments, including those between Nvidia, OpenAI, xAI, and other AI companies.

“Is it true that the demand for Nvidia's chips is increasing, or is Nvidia creating that demand?” he asked. “Why does Nvidia have to invest in these companies in order for them to buy their chips?”

Crony capitalism watch

What happened: More than three dozen companies and their representatives, including those from Microsoft, Meta, Google, and Amazon, attended a fundraising dinner at the White House Wednesday evening. The goal, according to the invitation: to “Establish the Magnificent White House Ballroom.” It will cost $250 million to build, and the tech companies are all too eager to pay up.

Thanks to YouTube’s shameful agreement in late September to settle a 2021 lawsuit over its suspension of Trump’s account after the Jan. 6 insurrection, Google has already funded the ballroom to the tune of $22 million.

Now, Senate Democrats want to know whether that settlement should be considered a bribe. Noting the ongoing antitrust case against the company, a group of senators sent a letter to Google CEO Sundar Pichai and YouTube CEO Neal Mohan asking how the settlement might influence the DOJ’s decision on whether to seek stricter remedies for Google’s illegal ad and search monopolies.

Why we’re following: Even in a time when anyone with money and power can seemingly get away with anything, it's still a shock to see the world's biggest companies lining up to buy the president's favor. Transactions that would have been unimaginable as little as 10 months ago are now de rigueur. It's hard to imagine much coming of the Democrats' letter, but at least they bothered to send it.

What people are saying: Sen. Elizabeth Warren, one of the senators who signed the letter to Google, questioned the ballroom dinner on X: “What are these billionaires and giant corporations getting in exchange for donating to Donald Trump's $200 million ballroom? Do they think we're dumb enough to believe they're giving their money away for free?”

“Every company that is invited to that dinner that either doesn’t show or doesn’t give knows now they will be out of favor with the Trump administration,” Claire Finkelstein, a University of Pennsylvania law professor, told the Wall Street Journal.

Side Quests

How the Trump companies have made $1 billion in crypto so far. (You can probably guess.) Trump’s TikTok deal could give the US government unprecedented sway over social media. The EFF sued the Trump administration over alleged mass surveillance of legal residents on social media.

White House AI czar David Sacks said Anthropic’s regulatory approach was based on “fear-mongering” and “regulatory capture.” (Did he forget that he and his friends run the federal government?) Despite complaints, Google left up an Israeli influence campaign on YouTube that claimed Gazans have access to food.

Pew survey: most people around the world are more concerned than excited about AI, and trust their countries and the EU to regulate it more than they trust the United States or China.

Europe’s data center rollout is raising concerns about water scarcity. Japan asked OpenAI to refrain from infringing on anime and manga IPs. (I'm sure OpenAI will get right on that.) Tim Cook pledged to boost Apple’s investment in China. Chinese criminal operations made $1 billion over the last three years through text scams. The AI chatbots replacing India’s call-center workers. Microsoft reportedly plans to produce most of its products outside of China.

OpenAI plans to let you log in with ChatGPT. Sora 2 users on the Pro plan can now generate up to 25 seconds of video. Google introduced new capabilities in its AI filmmaking tool Flow alongside the release of Veo 3.1. Anthropic released Claude Haiku 4.5 and added Skills for Claude.

Meta partnered with Arm Holdings to power AI recommendations across its apps. Apple’s head of AI web search Ke Yang is reportedly leaving for Meta, dealing another blow to Apple's snakebitten AI efforts.

Jeff Bezos now owns less than 10 percent of Amazon after selling 100 million shares. Q&A with YouTube CEO Neal Mohan on his vision for the future of entertainment.

Meta added group chats to Threads. X will display more information on user profiles. Waymo is expanding to London.

Apple’s new lineup gets an M5 chip overhaul, including the new iPad Pro, the new 14-inch MacBook Pro, and the Vision Pro headset.

Black hole theoretical physicist Alex Lupsasca joined OpenAI’s science initiative. A Gemma model found a potential new pathway to treat cancer. Google’s Flood Hub helped Bangladeshi farmers predict floods early. How health providers use AI to quantify pain. What happens when facial recognition tech can’t recognize your face.

Those good posts

For more good posts every day, follow Casey’s Instagram stories.

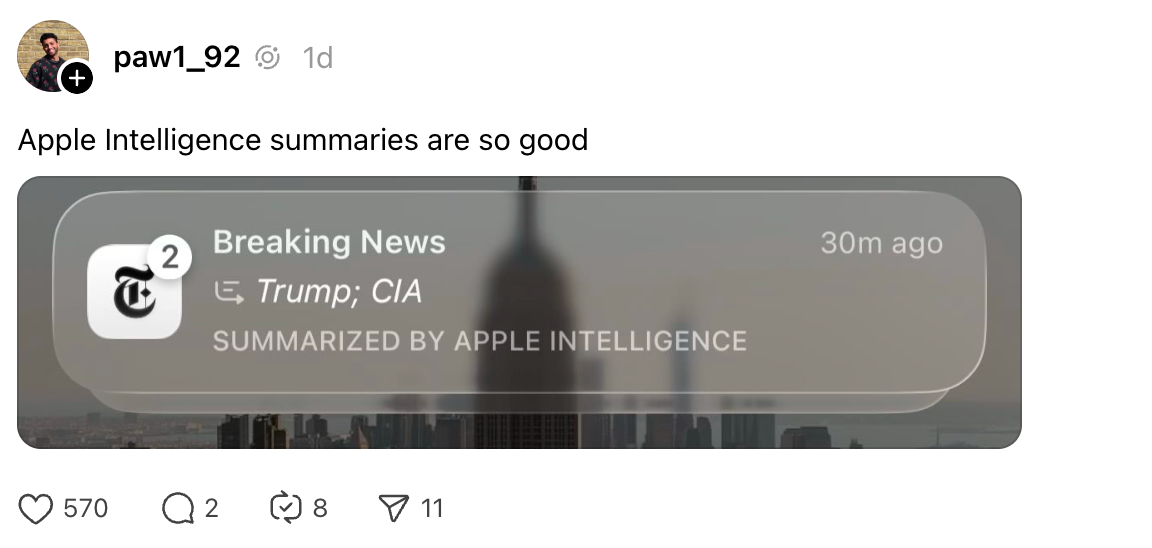

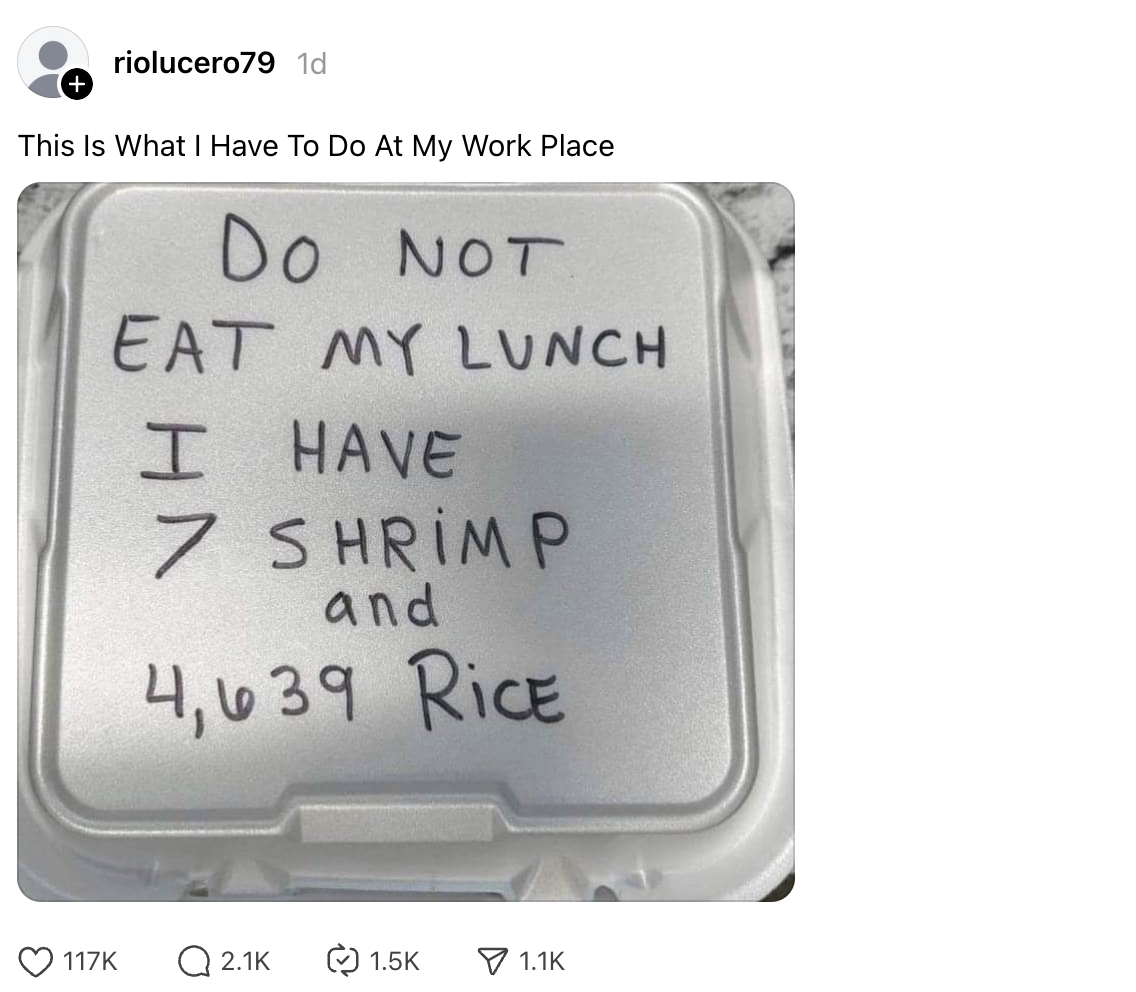


Talk to us

Send us tips, comments, questions, and posts: casey@platformer.news. Read our ethics policy here.